This article covers the key hardware components that power artificial intelligence applications. Central processing units (CPUs), graphics processing units (GPUs), and dedicated neural network accelerator chips each play a distinct role in letting AI algorithms process data and learn from it. Understanding what each component does makes it easier to choose hardware that matches your workload.

The Essential Hardware for AI Applications

Are you interested in Artificial Intelligence (AI) but unsure what hardware you need to get started? The sections below break down the essential hardware components for AI applications and explain what each one contributes.

What hardware is needed for AI?

The right hardware has a direct effect on how quickly models train and how efficiently they run. Processors, accelerators, memory, storage, networking, cooling, and power delivery each affect how well a system handles complex algorithms and large data sets. Let’s take a closer look at each of them.

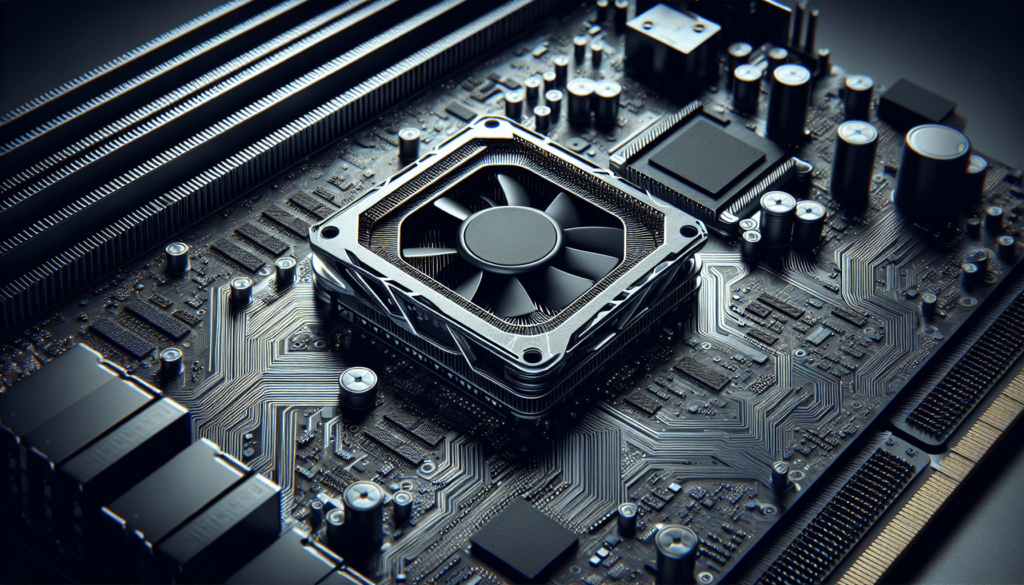

Central Processing Unit (CPU)

The CPU is often called the brain of the computer because it executes instructions and coordinates the rest of the system. In AI applications, the CPU typically handles data loading and preprocessing, runs classical machine learning algorithms, and keeps accelerators such as GPUs supplied with work. Look for CPUs with many cores and high clock speeds so that data preparation does not become the bottleneck.
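As a rough illustration of why core count matters, the sketch below reads the number of logical cores with Python's standard `os.cpu_count()` and derives a worker count for parallel data loading. The helper name `suggest_worker_count` and the rule of keeping one core free are assumptions made for this example, not a recommendation from any particular framework.

```python
import os


def suggest_worker_count(logical_cores, reserved=1):
    """Suggest how many data-loading worker processes to start.

    Keeps `reserved` cores free for the main training process and
    always returns at least 1. This heuristic is an assumption for
    illustration; tune it for your own workload.
    """
    if logical_cores is None:  # os.cpu_count() can return None
        return 1
    return max(1, logical_cores - reserved)


if __name__ == "__main__":
    cores = os.cpu_count()
    print(f"Logical cores detected: {cores}")
    print(f"Suggested data-loading workers: {suggest_worker_count(cores)}")
```

On an 8-core machine this heuristic suggests 7 workers. In practice the best value depends on how expensive the preprocessing is, so treat it as a starting point.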

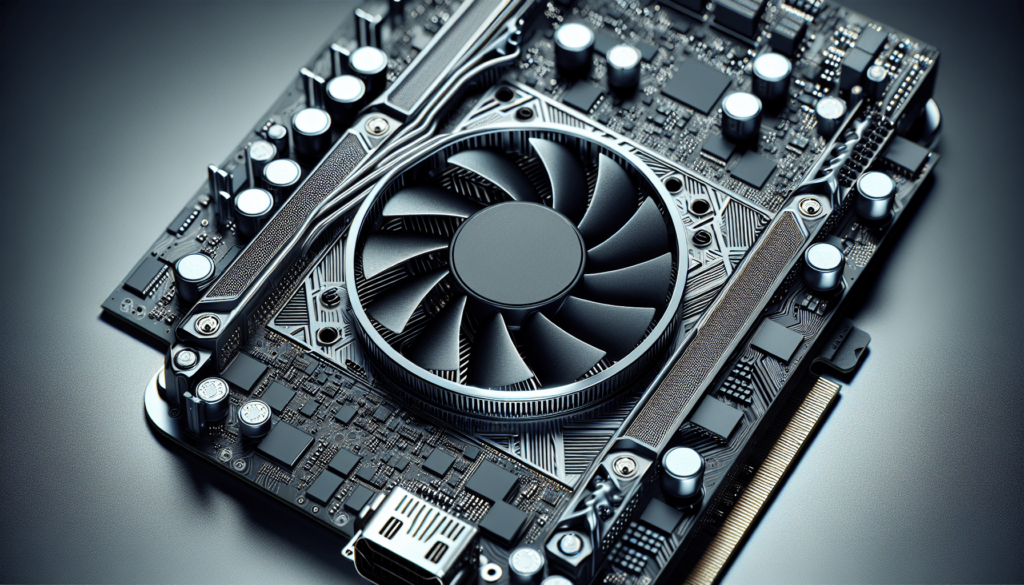

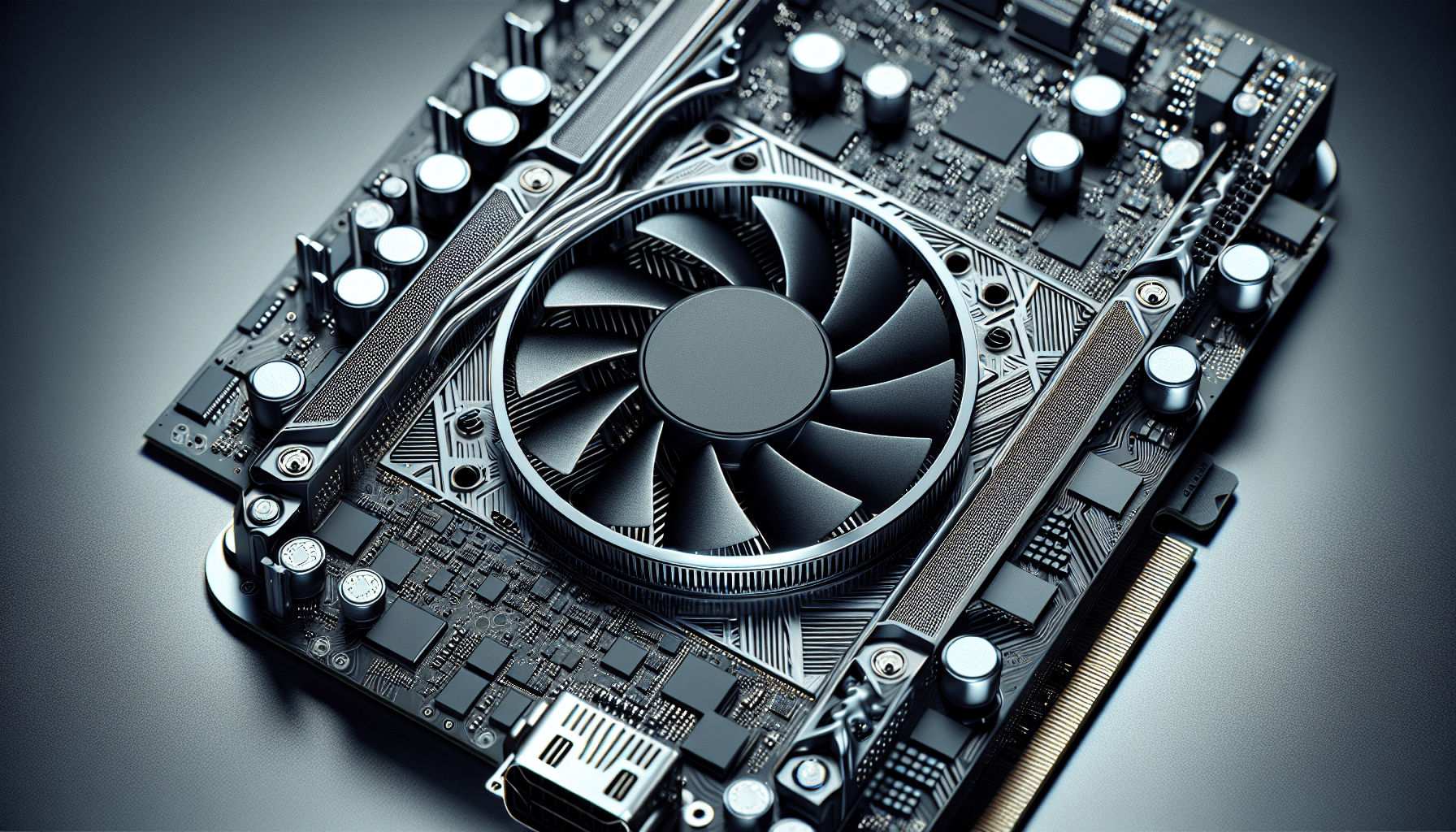

Graphics Processing Unit (GPU)

While CPUs handle general computing tasks, GPUs are built for massively parallel workloads, which makes them well suited to AI applications. GPUs accelerate the matrix operations at the heart of deep learning, so they are widely used to train neural networks for tasks such as image and speech recognition. When choosing a GPU for AI applications, look at the number of parallel cores (called CUDA cores on NVIDIA hardware), the amount of on-board memory, and the memory bandwidth.
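GPU memory is often the first limit people hit, so a back-of-the-envelope estimate can help when comparing cards. The sketch below assumes 32-bit parameters and an Adam-style optimizer that keeps two extra values per parameter. The function name `estimate_training_memory_gb` is hypothetical, and the result is only a lower bound because activation memory is deliberately left out.

```python
def estimate_training_memory_gb(num_params, bytes_per_param=4, optimizer_states=2):
    """Rough lower bound on GPU memory needed for training, in GB (10**9 bytes).

    Counts one copy of the weights, one copy of the gradients, and
    `optimizer_states` extra values per parameter (2 for Adam-style
    optimizers). Activations and framework overhead are NOT included.
    """
    copies = 1 + 1 + optimizer_states  # weights + gradients + optimizer state
    return num_params * bytes_per_param * copies / 1e9


if __name__ == "__main__":
    # A model with one billion parameters, stored as 32-bit floats.
    print(estimate_training_memory_gb(1_000_000_000))  # 16.0
```

Under these assumptions, a one-billion-parameter model needs at least 16 GB before any activations are stored, which is why on-board memory matters as much as core count.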

Field-Programmable Gate Arrays (FPGAs)

FPGAs are integrated circuits whose logic can be reconfigured after manufacturing to perform specific tasks efficiently. In the realm of AI, FPGAs are often used to accelerate specific algorithms and pipeline stages, such as image processing and data encryption. Because they can be tailored to a particular workload, FPGAs are worth considering when that workload is well defined and latency or power efficiency matters.

Tensor Processing Units (TPUs)

TPUs are custom-built hardware accelerators designed by Google specifically for machine learning tasks. They were originally built around TensorFlow, one of the most popular frameworks for machine learning and deep learning, and can also be used from JAX and from PyTorch through the XLA compiler. TPUs are known for their high performance and efficiency on large matrix workloads, and they are mainly accessed through Google Cloud rather than bought as standalone parts.

Memory

In AI applications, ample memory is essential for holding large data sets and models while they are being processed. Look for systems with high-capacity RAM so that training data and intermediate results do not have to be swapped to disk. For storage, fast options such as solid-state drives (SSDs) reduce data access times and shorten data loading.
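To judge how much RAM is "ample", it helps to estimate the in-memory size of a data set. The sketch below multiplies sample count, values per sample, and bytes per value. The function names and the 50% headroom rule in `fits_in_ram` are assumptions chosen for illustration.

```python
def dataset_size_gb(num_samples, values_per_sample, bytes_per_value=4):
    """In-memory size of a dense numeric data set, in GB (10**9 bytes)."""
    return num_samples * values_per_sample * bytes_per_value / 1e9


def fits_in_ram(dataset_gb, ram_gb, headroom=0.5):
    """True if the data set uses at most `headroom` of total RAM.

    The 0.5 default is an illustrative assumption that leaves room for
    the operating system, the model, and intermediate buffers.
    """
    return dataset_gb <= ram_gb * headroom


if __name__ == "__main__":
    values = 224 * 224 * 3  # one 224x224 RGB image
    as_float32 = dataset_size_gb(1_000_000, values, bytes_per_value=4)
    as_uint8 = dataset_size_gb(1_000_000, values, bytes_per_value=1)
    print(f"float32: {as_float32:.1f} GB, uint8: {as_uint8:.1f} GB")
    print("uint8 fits in 256 GB RAM:", fits_in_ram(as_uint8, 256))
```

One million 224x224 RGB images take roughly 602 GB as 32-bit floats but about 151 GB as 8-bit integers, so the storage format can decide whether a data set fits in memory or has to be streamed from an SSD.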

Networking

Efficient networking is crucial for AI applications that process large data sets and coordinate multiple computing resources. Look for high-speed interconnects such as high-bandwidth Ethernet or InfiniBand to ensure fast communication between machines. For larger AI workloads, consider a distributed computing environment that spreads training or inference across several nodes to improve resource utilization and scalability.
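The effect of link speed is easy to quantify with a simple size-over-bandwidth calculation. The sketch below is a minimal estimate that ignores latency and protocol details. The function name `transfer_time_seconds` and the `efficiency` parameter are assumptions introduced for this example.

```python
def transfer_time_seconds(size_gigabytes, link_gigabits_per_second, efficiency=1.0):
    """Idealized time to move data over a network link.

    `efficiency` (0 < efficiency <= 1) is an assumed fraction of the
    nominal link speed that is actually achieved.
    """
    if link_gigabits_per_second <= 0:
        raise ValueError("link speed must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    size_gigabits = size_gigabytes * 8  # 8 bits per byte
    return size_gigabits / (link_gigabits_per_second * efficiency)


if __name__ == "__main__":
    for speed in (10, 100):
        seconds = transfer_time_seconds(100, speed)
        print(f"100 GB over {speed} Gb/s: {seconds:.0f} s")
```

Moving 100 GB takes about 80 seconds on a 10 Gb/s link and about 8 seconds on a 100 Gb/s link in this idealized model. Real transfers are slower, which the `efficiency` argument can approximate.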

Cooling Systems

AI workloads keep CPUs and GPUs under heavy load for long periods, which generates considerable heat and raises the risk of thermal throttling, where a chip lowers its clock speed to protect itself. Effective cooling, such as liquid cooling or high-performance fans, keeps CPUs, GPUs, and other components within their operating temperature range. Proper cooling supports long-term hardware reliability and lets components sustain their rated performance.

Power Supply

AI hardware setups can be power-hungry because training machine learning models and deep neural networks keeps processors and accelerators near full load. Choose a high-quality power supply unit (PSU) that can deliver stable power to all components, especially under sustained heavy workloads. Look for PSUs with high efficiency ratings (such as 80 PLUS certification) and enough wattage headroom above the combined peak draw of your components to prevent power-related system failures.
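A simple way to size a PSU is to add up the peak draw of each component and apply a safety margin. The sketch below does exactly that. The function name `recommended_psu_watts`, the 30% headroom, and the component wattages are all illustrative assumptions, so check the manufacturers' specifications for real figures.

```python
def recommended_psu_watts(component_watts, headroom=0.3):
    """Sum peak component draw and add a safety margin.

    `component_watts` maps a component name to its peak draw in watts.
    `headroom` is the extra fraction to add on top (0.3 means 30%),
    an assumed margin for illustration.
    """
    if headroom < 0:
        raise ValueError("headroom must not be negative")
    total = sum(component_watts.values())
    return round(total * (1 + headroom))


if __name__ == "__main__":
    # Hypothetical example build; the wattages are placeholders.
    build = {"cpu": 150, "gpu": 350, "drives_fans_board": 100}
    print(f"Recommended PSU: {recommended_psu_watts(build)} W")
```

For this hypothetical 600 W build the estimate comes to 780 W, so the next standard PSU size above that figure would be a reasonable choice.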

Conclusion

The hardware requirements for AI applications are diverse and demanding, so components need to be selected with care. A well-balanced system combines capable CPUs with suitable accelerators (GPUs, FPGAs, or TPUs), ample memory, fast storage and networking, adequate cooling, and a reliable power supply. Start from your specific AI workloads and performance requirements, and choose components that remove the bottlenecks those workloads actually hit.