Welcome to a brief exploration of perceptrons! In this article, you will learn what a perceptron is, how it computes an output, how it is trained, and where it falls short. Whether you are a student curious about machine learning or simply interested in expanding your knowledge, read on to discover the key features of this fundamental concept. By the end, you will have a clearer picture of the role perceptrons play in artificial intelligence and decision-making.

Understanding Perceptrons

Have you ever heard of perceptrons and wondered what they are and how they work? Understanding perceptrons is a key stepping stone into artificial intelligence and machine learning.

What is a Perceptron?

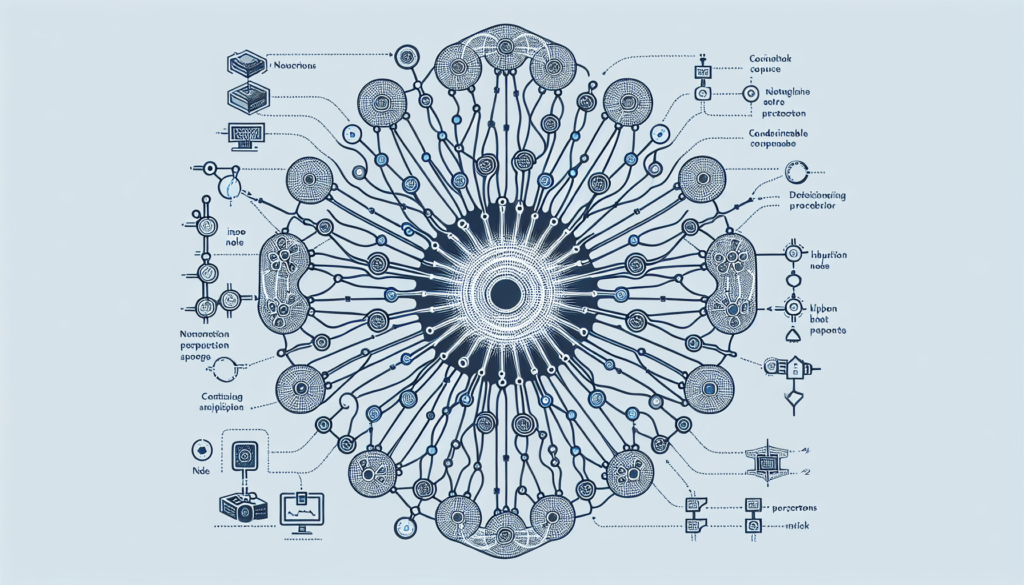

A perceptron is the simplest type of artificial neural network: a single artificial neuron that serves as a building block for more complex neural networks. It is a simple algorithm that makes a binary decision based on input data. Think of it as the basic unit of computation in a neural network.

Perceptrons were first introduced by Frank Rosenblatt in the late 1950s and are often used in binary classification tasks. They take several input values, apply weights to these inputs, sum them up, and then pass the result through an activation function to produce an output.

How Does a Perceptron Work?

Imagine a perceptron as a single neuron in a human brain. It takes input from multiple sources, processes that information, and produces an output. A key component of a perceptron is the activation function, which determines whether the neuron “fires” or not based on the weighted sum of inputs.

Here’s a simplified breakdown of how a perceptron works:

- Input: The perceptron receives input signals (x1, x2, …, xn) along with corresponding weights (w1, w2, …, wn).

- Weighted Sum: It calculates the weighted sum of inputs and weights as follows:

z = w1*x1 + w2*x2 + ... + wn*xn

- Activation Function: The perceptron applies an activation function (often a step function or sigmoid function) to the weighted sum (z) to produce the output:

output = activation(z)
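The steps above can be sketched in a few lines of Python. This is only an illustration: the function names `step` and `perceptron_output`, the threshold of 0, and the example inputs and weights are assumptions chosen for the demo, not values from a particular library.

```python
def step(z, threshold=0.0):
    """Step activation: the neuron "fires" (1) only if z exceeds the threshold."""
    return 1 if z > threshold else 0


def perceptron_output(inputs, weights, activation=step):
    """Compute activation(w1*x1 + w2*x2 + ... + wn*xn)."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Weighted sum of inputs and weights
    z = sum(w * x for w, x in zip(weights, inputs))
    # Activation function turns the sum into the final output
    return activation(z)


# Hypothetical example values
x = [1.0, 0.0, 1.0]
w = [0.5, -0.6, 0.2]
print(perceptron_output(x, w))  # z = 0.5 + 0.0 + 0.2 = 0.7, which is > 0, so prints 1
```

Passing the activation as a parameter makes it easy to swap the step function for another one, such as a sigmoid, without touching the weighted-sum logic.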

Perceptron Model

In mathematical terms, we can represent a perceptron using the following formula:

output = activation(w1*x1 + w2*x2 + ... + wn*xn)

Where:

- output is the final output of the perceptron.

- w1, w2, ..., wn are the weights assigned to input values.

- x1, x2, ..., xn are input values.

- activation is the activation function applied to the weighted sum.

Training a Perceptron

Training a perceptron involves adjusting the weights of inputs to minimize errors in predicting outcomes. This process is known as the perceptron learning algorithm and follows these steps:

- Initialize Weights: Start by assigning random weights to input values.

- Calculate Output: Give input to the perceptron and calculate the output using the activation function.

- Compare Output: Compare the predicted output with the actual output and calculate the error.

- Update Weights: Adjust the weights based on the error to improve the accuracy of predictions.

- Repeat: Repeat the process for each training example, usually over several passes through the data, until the model reaches the desired level of accuracy.
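To make these steps concrete, here is a minimal training sketch in Python that learns the logical AND function. Several details are assumptions made for the example rather than part of the description above: the function names, the learning rate of 0.1, the random seed, the epoch limit, and the extra `bias` term (a learnable offset that plays the role of a threshold).

```python
import random


def predict(inputs, weights, bias):
    """Step activation applied to the weighted sum plus a bias."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z > 0 else 0


def train_perceptron(samples, learning_rate=0.1, max_epochs=1000, seed=0):
    rng = random.Random(seed)
    n_inputs = len(samples[0][0])
    # Initialize Weights: small random starting values
    weights = [rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in samples:
            output = predict(inputs, weights, bias)  # Calculate Output
            error = target - output                  # Compare Output
            if error != 0:
                mistakes += 1
                # Update Weights: nudge each weight in the direction that reduces the error
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # Repeat until a full pass produces no errors
            break
    return weights, bias


# Truth table for AND, which is linearly separable
and_gate = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train_perceptron(and_gate)
print([predict(x, weights, bias) for x, _ in and_gate])  # [0, 0, 0, 1]
```

Because AND is linearly separable, the loop stops as soon as one full pass over the four examples produces no mistakes.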

Perceptron as a Linear Classifier

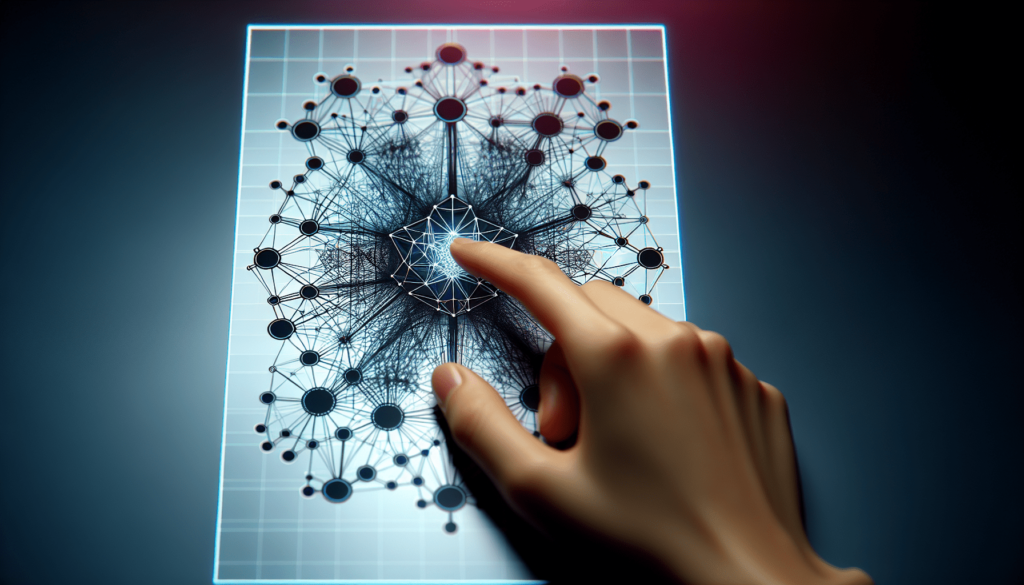

One of the key applications of perceptrons is in binary classification tasks. A perceptron can act as a linear classifier, separating input data into two classes based on a decision boundary.

For example, consider a perceptron with two input features (x1, x2) and weights (w1, w2). The decision boundary is determined by the equation:

w1*x1 + w2*x2 = threshold

If the weighted sum is greater than the threshold, the perceptron outputs one class; otherwise, it outputs the other class.
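As a small illustration of this rule, the sketch below classifies a few points in the plane. The weights, the threshold, the sample points, and the function name `classify` are all hypothetical values picked so that the boundary is the line x1 + x2 = 1.5.

```python
def classify(x1, x2, w1, w2, threshold):
    """Return 1 if the weighted sum is greater than the threshold, else 0."""
    return 1 if w1 * x1 + w2 * x2 > threshold else 0


# Hypothetical parameters: the decision boundary is x1 + x2 = 1.5
w1, w2, threshold = 1.0, 1.0, 1.5

points = [(0, 0), (0, 1), (1, 0), (1, 1), (2, 0.5)]
labels = [classify(x1, x2, w1, w2, threshold) for x1, x2 in points]
for point, label in zip(points, labels):
    print(point, "-> class", label)
# Points below the line fall in class 0; (1, 1) and (2, 0.5) lie above it and fall in class 1.
```

Changing the weights rotates the line, and changing the threshold shifts it, but the boundary always stays straight.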

Perceptron Learning Rule

The perceptron learning algorithm follows a simple rule to update weights based on errors. The update rule is as follows:

Δwi = η * (target - output) * xi

Where:

- Δwi is the change in weight for input i.

- η is the learning rate, a hyperparameter that controls the size of weight updates.

- target is the actual output or target value.

- output is the predicted output.

- xi is the input value corresponding to weight wi.
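A single update can be worked through in Python. The numbers here (weights, inputs, and a learning rate of 0.5) and the helper name `weight_updates` are made up for illustration. The sketch also assumes the usual convention that each change is added to its weight, that is, wi becomes wi + Δwi.

```python
def weight_updates(inputs, target, output, learning_rate):
    """Return the list of Δwi = η * (target - output) * xi, one per input."""
    return [learning_rate * (target - output) * x for x in inputs]


# Hypothetical state before the update
weights = [0.25, -0.5]
inputs = [1.0, 2.0]
target, output = 1, 0  # the perceptron predicted 0 but should have predicted 1

deltas = weight_updates(inputs, target, output, learning_rate=0.5)
new_weights = [w + d for w, d in zip(weights, deltas)]

print(deltas)       # [0.5, 1.0]
print(new_weights)  # [0.75, 0.5]

# When the prediction is already correct, the error is zero and nothing changes
print(weight_updates(inputs, target=1, output=1, learning_rate=0.5))  # [0.0, 0.0]
```

Note that the input with the larger value receives the larger correction, since each change is scaled by its own xi.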

Limitations of Perceptrons

While perceptrons are powerful in certain applications, they also have limitations that restrict their capabilities. Understanding these limitations is crucial for developing more sophisticated neural networks.

Linear Separability

One of the key limitations of perceptrons is their inability to learn non-linear patterns. Since the decision boundary of a perceptron is a hyperplane, it can only classify data that is linearly separable.

For datasets that are not linearly separable, the perceptron learning algorithm never converges: no set of weights classifies every example correctly, so the weights keep changing from one pass to the next. This limitation led to the development of more complex neural network architectures like multi-layer perceptrons.

Single Layer Perceptrons

A single-layer perceptron can only learn linear decision boundaries. In scenarios where data is not linearly separable, a single-layer perceptron fails to capture complex patterns and relationships.

To overcome this limitation, multi-layer perceptrons (MLPs) were introduced, allowing for the learning of non-linear functions through hidden layers and activation functions.

XOR Problem

The XOR (exclusive OR) problem is a classic example that highlights the limitations of single-layer perceptrons. The XOR function outputs true only when its two inputs are different, so it is not linearly separable: no single straight line separates the true cases from the false cases.

A single-layer perceptron fails to learn the XOR function due to its linear decision boundary. However, by introducing a hidden layer with a non-linear activation function, an MLP can successfully solve the XOR problem.
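One way to see this is to wire up a tiny two-layer network by hand. In the sketch below the weights and biases are hand-picked rather than learned, and the names `neuron` and `xor_mlp` are illustrative. The hidden layer computes OR and NAND, and the output neuron combines them with AND.

```python
def step(z):
    """Step activation: 1 if z is positive, else 0."""
    return 1 if z > 0 else 0


def neuron(inputs, weights, bias):
    """A single perceptron: weighted sum plus bias, followed by a step."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_mlp(x1, x2):
    # Hidden layer: two perceptrons, each drawing its own straight boundary
    h_or = neuron([x1, x2], [1.0, 1.0], -0.5)      # fires if x1 OR x2
    h_nand = neuron([x1, x2], [-1.0, -1.0], 1.5)   # fires unless x1 AND x2
    # Output layer: fires only when both hidden neurons fire
    return neuron([h_or, h_nand], [1.0, 1.0], -1.5)


print([xor_mlp(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]])  # [0, 1, 1, 0]
```

Each neuron on its own still draws a straight line. It is the combination of two lines in the hidden layer that carves out the region where exactly one input is on.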

Applications of Perceptrons

Despite their limitations, perceptrons have found applications in various fields, laying the groundwork for more advanced neural network models. Understanding the practical uses of perceptrons can provide insights into their versatility.

Image Recognition

Networks built from perceptron-like units are widely used in image recognition tasks, where they classify objects or detect patterns within images. Trained on large datasets, they learn to identify features and distinguish between different objects.

In convolutional neural networks (CNNs), the units in the convolutional layers follow the same pattern as a perceptron: each one computes a weighted sum over a small patch of the input image and passes it through an activation function. The extracted features are then used for classification.

Natural Language Processing

Perceptrons play a crucial role in natural language processing (NLP) tasks such as sentiment analysis, text classification, and language translation. By processing input text data using perceptrons, models can analyze and understand human language patterns.

In recurrent neural networks (RNNs) and long short-term memory (LSTM) networks, the same weighted-sum-and-activation units are applied step by step to sequential data, which lets the model generate context-aware predictions in NLP applications.

Robotics

Perceptrons have applications in robotics and autonomous systems, where they can make decisions based on sensory input and environmental cues. By training perceptrons to recognize objects, navigate obstacles, and interact with the environment, robots can perform complex tasks autonomously.

In reinforcement learning algorithms, neural networks built from these units enable robots to learn from trial and error, adjust their actions, and optimize decision-making processes for efficient task completion.

Conclusion

In conclusion, perceptrons are fundamental building blocks in the field of artificial neural networks. While simple in structure, they serve as the foundation for more advanced neural network architectures and machine learning models.

By understanding how perceptrons work, their limitations, and their applications, you can gain insights into the inner workings of neural networks and their real-world implications. Whether you're new to artificial intelligence or exploring machine learning applications, grasping the concept of perceptrons is a valuable step toward understanding larger models.