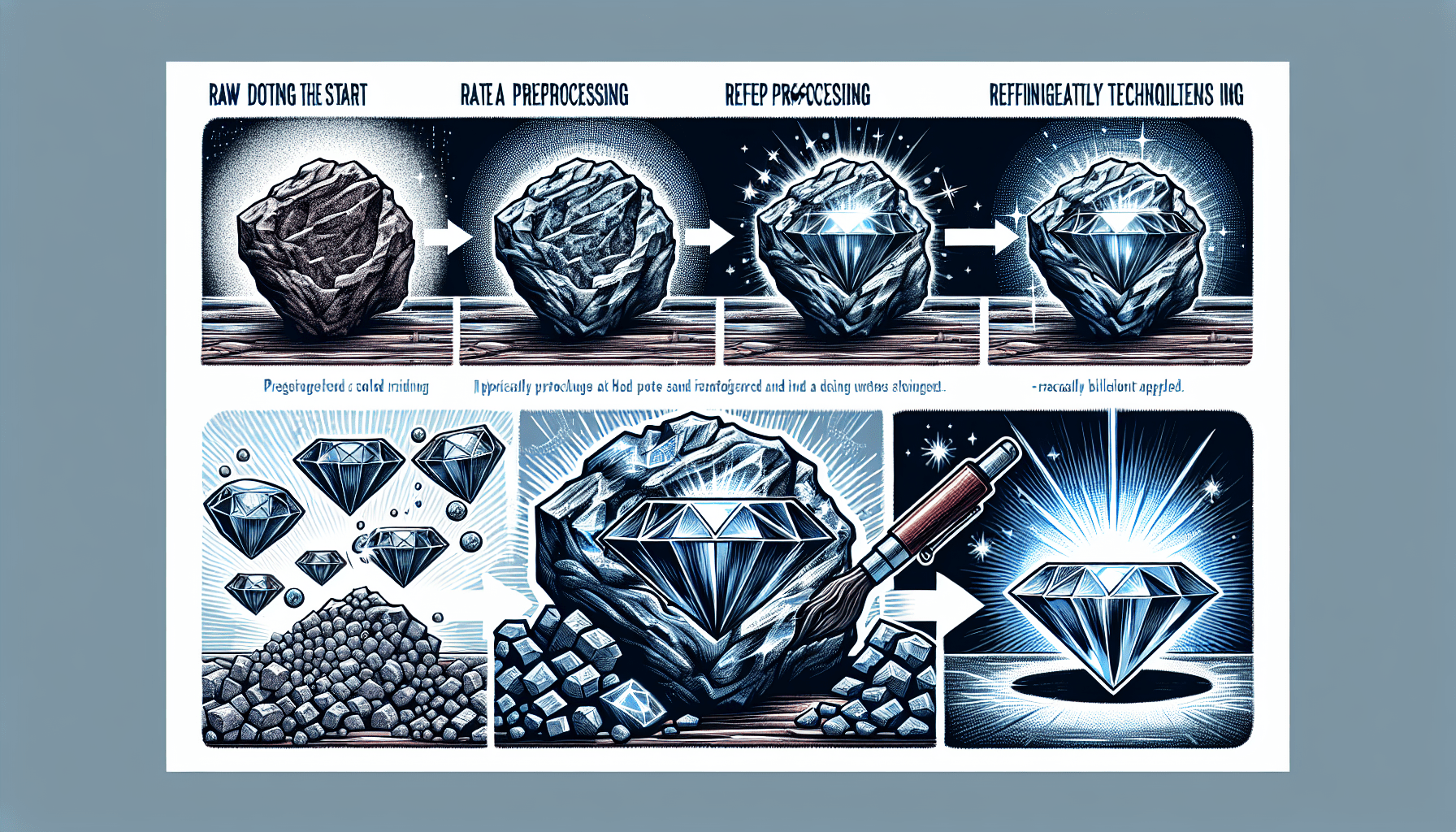

Data preprocessing is an essential step in the world of data analysis and machine learning. It involves transforming raw data into a format that is easier to work with and more suitable for analysis. But what exactly does it entail? In this article, we will demystify the concept of data preprocessing, breaking down the steps involved and explaining why it is crucial for obtaining accurate and meaningful insights from your data. So, strap in and get ready to unravel the mysteries of data preprocessing!

What is Data Preprocessing?

Data preprocessing is a crucial step in data analysis and machine learning that involves transforming raw data into a format that can be easily understood and analyzed by algorithms. It encompasses a range of techniques and processes aimed at cleaning, transforming, and integrating data to improve its quality and make it suitable for further analysis and modeling.

Importance of Data Preprocessing

Improving Data Quality

One of the main reasons why data preprocessing is important is that it helps improve the quality of the data. Raw data often contains errors, noise, and inconsistencies that can lead to inaccurate results. By removing or correcting these issues during the preprocessing stage, we make the data used for analysis more reliable and trustworthy.

Decreasing Data Inconsistencies

Data inconsistencies are a common problem in real-world datasets. They can arise from missing values, duplicate records, or discrepancies in formatting, such as the same date recorded as "2023-01-05" in one source and "05/01/2023" in another. Data preprocessing techniques enable us to identify and resolve these inconsistencies so that the data stays consistent and reliable throughout the analysis process.

Enhancing Efficiency of Machine Learning Models

Data preprocessing also makes machine learning models more effective and faster to train. Many algorithms, particularly those that rely on distances between points or on gradient descent, are sensitive to the format and numeric range of their inputs. Preprocessing techniques such as normalization, standardization, and scaling bring features onto comparable ranges, making it easier for the models to learn patterns and make accurate predictions.

Data Cleaning

Removing Irrelevant Data

Data cleaning begins with removing irrelevant or unnecessary data from the dataset. This could include variables that have no meaningful relationship to the question being analyzed, or columns that contain mostly missing values. By eliminating these irrelevant variables, we can reduce noise in the dataset and improve the overall quality of the data.
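
As a minimal sketch of the missing-value criterion, assuming the data is held as a list of Python dicts with `None` marking a missing entry, the snippet below drops any column whose share of missing values exceeds a threshold. The table, its column names, and the 0.5 threshold are hypothetical; deciding whether a variable is irrelevant to the question at hand still calls for domain knowledge that no simple rule captures.

```python
# Hypothetical toy table: each row is a dict, and None marks a missing value.
rows = [
    {"age": 34, "income": 52000, "fax_number": None, "city": "Oslo"},
    {"age": 29, "income": None, "fax_number": None, "city": "Lima"},
    {"age": 41, "income": 61000, "fax_number": None, "city": "Pune"},
    {"age": 38, "income": 58000, "fax_number": "555-0101", "city": "Oslo"},
]

def drop_sparse_columns(rows, max_missing_fraction=0.5):
    """Remove columns whose fraction of missing (None) values exceeds the threshold."""
    columns = rows[0].keys()
    keep = [
        col for col in columns
        if sum(row[col] is None for row in rows) / len(rows) <= max_missing_fraction
    ]
    return [{col: row[col] for col in keep} for row in rows]

cleaned = drop_sparse_columns(rows)
print(sorted(cleaned[0]))  # ['age', 'city', 'income']  (fax_number is 75% missing)
```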

Handling Missing Data

Missing data is a common occurrence in real-world datasets. Data preprocessing techniques provide several approaches to handle missing data, including imputation methods and deletion strategies. Imputation methods fill in missing values with estimates based on statistical techniques, while deletion strategies remove the instances or variables that have missing data. By handling missing data deliberately rather than ignoring it, we can reduce bias in the analysis and protect the integrity of our results.
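
The two families of strategies can be compared on a single column. This is a minimal sketch using only the standard library; the `ages` values are made up, and mean imputation is just one of several statistical choices (median or model-based imputation are common alternatives).

```python
from statistics import mean

# Hypothetical column with two missing entries (None).
ages = [34, None, 41, 38, None, 29]

# Deletion strategy: drop every missing entry.
complete = [a for a in ages if a is not None]

# Imputation strategy: replace each missing entry with the mean of the observed values.
fill_value = mean(complete)
imputed = [fill_value if a is None else a for a in ages]

print(complete)  # [34, 41, 38, 29]
print(imputed)   # [34, 35.5, 41, 38, 35.5, 29]
```

Mean imputation keeps every row but shrinks the column's variance, because every filled-in value sits exactly at the mean; deletion avoids that distortion but discards the rest of each incomplete record.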

Dealing with Duplicate Data

Duplicate data can significantly affect the accuracy and efficiency of data analysis. During the preprocessing stage, duplicate records can be identified and removed, a process known as deduplication. By removing duplicate data, we can reduce the redundancy in our dataset, improve the efficiency of analysis, and make our results more reliable.
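
A minimal deduplication sketch, assuming records are hashable tuples: it keeps the first occurrence of each record and drops later copies. The `key` parameter is an illustrative addition of ours that decides what counts as a duplicate, for example comparing only a lower-cased email address to catch near-duplicates. In pandas, `DataFrame.drop_duplicates` covers the same ground.

```python
# Hypothetical (email, name) records.
records = [
    ("alice@example.com", "Alice"),
    ("bob@example.com", "Bob"),
    ("alice@example.com", "Alice"),   # exact duplicate of the first record
    ("carol@example.com", "Carol"),
]

def deduplicate(records, key=lambda r: r):
    """Keep the first occurrence of each record, comparing records by key(record)."""
    seen = set()
    unique = []
    for record in records:
        k = key(record)
        if k not in seen:
            seen.add(k)
            unique.append(record)
    return unique

print(len(deduplicate(records)))                            # 3
print(len(deduplicate(records, key=lambda r: r[0].lower())))  # 3, matching on email only
```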

Data Transformation

Normalization

Normalization is a data transformation technique that scales numeric variables to a specific range, typically between 0 and 1. It ensures that all variables have a similar scale, preventing one variable from dominating others during analysis. Normalization is particularly useful when dealing with features with different units or scales, allowing algorithms to work efficiently and consistently across the dataset.
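
Min-max normalization maps each value x to (x - min) / (max - min), optionally stretched to another target range. The sketch below is a plain-Python version under the assumption of a single numeric column; the heights are hypothetical, and scikit-learn's `MinMaxScaler` is the usual library equivalent.

```python
def min_max_normalize(values, new_min=0.0, new_max=1.0):
    """Rescale values linearly so the smallest maps to new_min and the largest to new_max."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature has no spread; map every value to new_min.
        return [new_min for _ in values]
    return [(v - lo) / (hi - lo) * (new_max - new_min) + new_min for v in values]

heights_cm = [150, 165, 180, 195]  # hypothetical feature
print(min_max_normalize(heights_cm))
```

In a modeling workflow, min and max should be computed on the training data only and then reused for new data; otherwise information from the evaluation data leaks into training.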

Standardization

Standardization is another data transformation technique: it subtracts each variable's mean and divides by its standard deviation, so the result has a mean of 0 and a standard deviation of 1. Because standardized values are expressed in the same unit (standard deviations from the mean), variables become easier to compare. Standardization does not change the shape of a variable's distribution. It is especially beneficial for algorithms that expect centered inputs or rely on distance calculations, such as clustering and support vector machines.
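
Each value x becomes the z-score (x - μ) / σ. The sketch below uses the population standard deviation (`statistics.pstdev`), the same convention as scikit-learn's `StandardScaler`; the income figures are hypothetical.

```python
from statistics import mean, pstdev

def standardize(values):
    """Return z-scores: subtract the mean, then divide by the population standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant feature cannot be scaled; return zeros instead of dividing by zero.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

incomes = [30_000, 45_000, 60_000, 75_000, 90_000]  # hypothetical feature
z = standardize(incomes)
print([round(v, 3) for v in z])
```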

Scaling

Scaling is the general term for transforming numeric variables onto a common range. It helps ensure that all variables are on a similar scale, which matters for many machine learning algorithms. Min-max scaling (the normalization described above) and z-score scaling (standardization) are the most common scaling techniques. Both adjust the range of values within the dataset without changing the shape of each variable's distribution, making it easier for algorithms to process and analyze the data.

Binning

Binning is a technique used to transform continuous variables into discrete categories or bins. This can be useful when dealing with variables that have a large range of values or when we want to simplify the analysis by grouping similar values together. Binning can smooth out minor fluctuations (noise) and simplify analysis, especially when working with limited data or when a model or report calls for categorical inputs. The trade-off is that values falling in the same bin become indistinguishable, so some information is lost.
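
One simple scheme is equal-width binning: split the range [min, max] into `n_bins` intervals of equal width and record which interval each value falls in. This is a minimal sketch with hypothetical ages; equal-frequency (quantile) binning is a common alternative, and scikit-learn's `KBinsDiscretizer` offers both.

```python
def equal_width_bins(values, n_bins):
    """Return a bin index in 0..n_bins-1 for each value, using equal-width intervals over [min, max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)  # constant column: everything lands in one bin
    width = (hi - lo) / n_bins
    # int() truncates the position; min() keeps the maximum (on the top edge) in the last bin.
    return [min(int((v - lo) / width), n_bins - 1) for v in values]

ages = [3, 17, 25, 42, 58, 71, 90]  # hypothetical ages
print(equal_width_bins(ages, 3))    # [0, 0, 0, 1, 1, 2, 2]
```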

Encoding Categorical Data

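Categorical data refers to variables that represent discrete groups or categories. Many machine learning algorithms require numerical inputs, so categorical variables need to be encoded into numerical form during preprocessing. Common techniques include one-hot encoding, which creates one binary column per category, and label or ordinal encoding, which maps each category to an integer. Integer codes suit categories with a natural order (such as "small" < "medium" < "large") but can imply a false ordering for nominal categories such as colors, which is why one-hot encoding is often preferred for those. By transforming categorical variables into a numerical representation, we can include them in our analysis and enable machine learning algorithms to work with these features effectively.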
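
The difference between the two families shows up on two small hypothetical columns. This sketch hand-rolls both encodings; the category order for `size_order` is an assumption we supply from domain knowledge, and scikit-learn's `OneHotEncoder` and `OrdinalEncoder` are the usual library equivalents.

```python
colors = ["red", "green", "blue", "green"]  # nominal: no natural order

# One-hot encoding: one binary column per category (sorted for a stable column order).
categories = sorted(set(colors))  # ['blue', 'green', 'red']
one_hot = [[int(c == cat) for cat in categories] for c in colors]
print(one_hot)  # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]

# Ordinal encoding: map ordered categories to ranked integers.
size_order = {"small": 0, "medium": 1, "large": 2}  # order supplied by domain knowledge
sizes = ["medium", "small", "large"]
ordinal = [size_order[s] for s in sizes]
print(ordinal)  # [1, 0, 2]
```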

Feature Selection

Filter Methods

Filter methods for feature selection involve selecting features based on their inherent properties and statistical measures. These methods do not rely on the specific machine learning algorithm being used and evaluate features independently of each other. Common filtering techniques include correlation-based feature selection, chi-square tests, and information gain. Filter methods are computationally efficient and can quickly identify relevant features.
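
As an illustration of a correlation-based filter, the sketch below scores each feature by the absolute Pearson correlation with the target and keeps the top `k`, without training any model. The housing-style data and feature names are hypothetical. Keep in mind that Pearson correlation only detects linear relationships.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def filter_by_correlation(features, target, top_k):
    """Rank features by |Pearson r| with the target and return the top_k feature names."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    return sorted(scores, key=scores.get, reverse=True)[:top_k]

# Hypothetical data: house_no is an arbitrary identifier, unrelated to price.
features = {
    "sq_meters": [50, 70, 90, 110, 130],
    "rooms": [2, 3, 3, 4, 5],
    "house_no": [17, 3, 42, 8, 25],
}
price = [150, 205, 270, 330, 390]
print(filter_by_correlation(features, price, top_k=2))  # ['sq_meters', 'rooms']
```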

Wrapper Methods

Wrapper methods judge features by their predictive power, evaluating subsets of features with a specific machine learning algorithm. They compare the model's performance across different combinations of features. This is computationally expensive, but it often finds a better-performing subset for that particular model than filter methods do. Techniques such as forward selection, backward elimination, and recursive feature elimination fall under the category of wrapper methods.
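
Forward selection is a minimal example of the wrapper idea. Start with no features, repeatedly add whichever feature most improves the model's score, and stop when no addition helps. In this sketch the "model" is a 1-nearest-neighbour classifier scored by leave-one-out accuracy, a choice we made for brevity. The dataset is hypothetical, with feature 0 informative and feature 1 pure noise. scikit-learn's `SequentialFeatureSelector` implements the same strategy for arbitrary estimators.

```python
def loo_1nn_accuracy(X, y, features):
    """Leave-one-out accuracy of a 1-nearest-neighbour classifier restricted to `features`."""
    correct = 0
    for i in range(len(X)):
        nearest, best = None, float("inf")
        for j in range(len(X)):
            if j == i:
                continue
            d = sum((X[i][f] - X[j][f]) ** 2 for f in features)
            if d < best:
                nearest, best = j, d
        correct += y[nearest] == y[i]
    return correct / len(X)

def forward_selection(X, y, score):
    """Greedily add the feature that most improves score(X, y, subset); stop when nothing helps."""
    selected, best_score = [], 0.0
    remaining = list(range(len(X[0])))
    while remaining:
        trial_score, feature = max((score(X, y, selected + [f]), f) for f in remaining)
        if trial_score <= best_score:
            break
        selected.append(feature)
        remaining.remove(feature)
        best_score = trial_score
    return selected, best_score

# Hypothetical data: column 0 separates the classes, column 1 is noise.
X = [[1.0, 5.0], [1.2, 1.0], [0.9, 3.0], [5.0, 4.0], [5.2, 2.0], [4.8, 0.0]]
y = [0, 0, 0, 1, 1, 1]
print(forward_selection(X, y, loo_1nn_accuracy))  # ([0], 1.0)
```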

Embedded Methods

Embedded methods perform feature selection within the learning algorithm itself, choosing features during training based on their contribution to the model’s performance. Examples include L1-regularized (Lasso) regression, whose penalty drives the coefficients of unhelpful features to exactly zero, and the feature importances produced by decision trees and tree ensembles. Embedded methods are particularly useful when the number of features is large, as they handle feature selection and model training in a single step.
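
To see how Lasso selects features as a side effect of training, here is a minimal coordinate-descent sketch. It minimises (1/(2n)) * ||y - Xw||^2 + alpha * ||w||_1, the same objective scikit-learn's `Lasso` uses, and assumes already-centred data so no intercept is fitted. The data is hypothetical. The target is 2 * x1 + 0.1 * x2, so ordinary least squares would recover a small 0.1 weight on x2, while the L1 penalty at `alpha=0.5` sets that weight exactly to zero.

```python
def soft_threshold(rho, alpha):
    """Shrink rho towards zero by alpha, returning exactly 0.0 inside [-alpha, alpha]."""
    if rho > alpha:
        return rho - alpha
    if rho < -alpha:
        return rho + alpha
    return 0.0

def lasso_coordinate_descent(X, y, alpha, n_sweeps=100):
    """Minimise (1/(2n)) * ||y - Xw||^2 + alpha * ||w||_1 by cyclic coordinate descent.

    Assumes the columns of X and y are centred, so no intercept is fitted.
    """
    n, p = len(X), len(X[0])
    w = [0.0] * p
    for _ in range(n_sweeps):
        for j in range(p):
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            if z == 0:
                w[j] = 0.0  # an all-zero column carries no information
                continue
            # Correlation of feature j with the residual that excludes feature j's own contribution.
            rho = sum(
                X[i][j] * (y[i] - sum(X[i][k] * w[k] for k in range(p)) + w[j] * X[i][j])
                for i in range(n)
            ) / n
            w[j] = soft_threshold(rho, alpha) / z
    return w

# Hypothetical centred data: x2 has only a weak effect on y.
x1 = [-2, -1, 0, 1, 2]
x2 = [1, -2, 0, 2, -1]
X = [[a, b] for a, b in zip(x1, x2)]
y = [2 * a + 0.1 * b for a, b in zip(x1, x2)]
w = lasso_coordinate_descent(X, y, alpha=0.5)
print(w)  # x2's coefficient is exactly 0.0; x1's is shrunk from 2 to about 1.75
```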

Feature Extraction

Principal Component Analysis (PCA)

Principal Component Analysis (PCA) is a widely used technique for feature extraction. It transforms a dataset with potentially correlated variables into a new set of uncorrelated variables called principal components. The components are ordered: the first captures the largest possible share of the variance in the original data, the second captures the largest share of what remains, and so on. Keeping only the first few components therefore reduces dimensionality while retaining most of the variance. PCA is helpful when dealing with high-dimensional data, enabling efficient analysis and visualization.
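
The first principal component is the leading eigenvector of the data's covariance matrix. The sketch below finds it by power iteration, a simple method chosen here for clarity; libraries such as scikit-learn's `PCA` use an SVD instead. It also reports the explained-variance ratio, the share of total variance captured by that one component. The 2-D points are hypothetical and lie close to the line y = x.

```python
from math import sqrt

def pca_first_component(X, n_iter=100):
    """Return (direction, projected scores, explained-variance ratio) for the first principal component."""
    n, p = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(p)]
    centred = [[row[j] - means[j] for j in range(p)] for row in X]
    cov = [[sum(r[a] * r[b] for r in centred) / (n - 1) for b in range(p)] for a in range(p)]
    v = [1.0] * p  # starting vector; must not be orthogonal to the leading eigenvector
    for _ in range(n_iter):
        w = [sum(cov[a][b] * v[b] for b in range(p)) for a in range(p)]
        norm = sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    scores = [sum(r[j] * v[j] for j in range(p)) for r in centred]  # 1-D representation
    explained = (sum(s * s for s in scores) / (n - 1)) / sum(cov[j][j] for j in range(p))
    return v, scores, explained

X = [[1.0, 1.1], [2.0, 1.9], [3.0, 3.2], [4.0, 3.9], [5.0, 5.1]]  # hypothetical points
v, scores, explained = pca_first_component(X)
print(v, round(explained, 3))  # direction close to [0.707, 0.707]; ratio close to 1
```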

Linear Discriminant Analysis (LDA)

Linear Discriminant Analysis (LDA) is a supervised feature extraction technique commonly used for classification problems. LDA looks for linear combinations of features that maximize the separation between class means relative to the variance within each class. It projects the data onto this discriminant subspace, which has at most one dimension fewer than the number of classes. The resulting new features discriminate well between classes and can enhance the performance of classification models.
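
For two classes, LDA reduces to Fisher's discriminant: a single direction w = S_W^-1 (m1 - m0). Here m0 and m1 are the class means and S_W is the summed within-class scatter matrix. The sketch below handles the 2-D case with an explicit 2x2 inverse to stay dependency-free; the two point clouds are hypothetical. Projecting onto w turns each 2-D point into one number, and the two classes separate cleanly.

```python
def fisher_lda_direction(X0, X1):
    """Two-class Fisher discriminant for 2-D points: w = inverse(S_W) @ (m1 - m0)."""
    def mean(points):
        n = len(points)
        return [sum(p[0] for p in points) / n, sum(p[1] for p in points) / n]

    def scatter(points, m):
        s = [[0.0, 0.0], [0.0, 0.0]]
        for p in points:
            d = [p[0] - m[0], p[1] - m[1]]
            for a in range(2):
                for b in range(2):
                    s[a][b] += d[a] * d[b]
        return s

    m0, m1 = mean(X0), mean(X1)
    s0, s1 = scatter(X0, m0), scatter(X1, m1)
    sw = [[s0[a][b] + s1[a][b] for b in range(2)] for a in range(2)]  # within-class scatter
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    inv = [[sw[1][1] / det, -sw[0][1] / det], [-sw[1][0] / det, sw[0][0] / det]]
    diff = [m1[0] - m0[0], m1[1] - m0[1]]
    return [inv[0][0] * diff[0] + inv[0][1] * diff[1], inv[1][0] * diff[0] + inv[1][1] * diff[1]]

# Hypothetical classes.
class0 = [(1, 2), (2, 3), (3, 3), (2, 1)]
class1 = [(6, 5), (7, 8), (8, 7), (7, 6)]
w = fisher_lda_direction(class0, class1)
project = lambda p: p[0] * w[0] + p[1] * w[1]
print(max(map(project, class0)) < min(map(project, class1)))  # True
```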

Factor Analysis

Factor Analysis is a statistical method used to uncover underlying latent factors or dimensions from a set of observed variables. It aims to explain the correlation structure within the dataset by grouping variables that covary together. Factor analysis helps in reducing dimensionality, identifying underlying constructs, and simplifying the interpretation of complex datasets.

t-SNE

t-Distributed Stochastic Neighbor Embedding (t-SNE) is a dimensionality reduction technique primarily used for visualization. It maps high-dimensional data to a low-dimensional space, preserving the local structure and capturing complex relationships between data points. t-SNE is particularly effective in visualizing clusters and identifying patterns in the data, making it a valuable tool for exploratory data analysis.

Dimensionality Reduction

Curse of Dimensionality

The curse of dimensionality refers to the challenges and issues that arise when working with high-dimensional datasets. As the number of dimensions or features increases, the data becomes increasingly sparse, making it difficult to find meaningful patterns and relationships. The curse of dimensionality can lead to overfitting, increased computational complexity, and reduced performance of machine learning algorithms. Dimensionality reduction techniques aim to alleviate these problems by reducing the number of features while preserving the relevant information.
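
The sparsity claim can be made concrete. Assume points spread uniformly in the unit hypercube [0, 1]^d. A smaller cube that contains a fraction f of them must have edge length f^(1/d). The sketch below evaluates this for f = 0.10. In one dimension the neighbourhood covers 10% of the axis. In 10 dimensions it must already span about 79% of every axis, and in 100 dimensions about 98%. A "local" neighbourhood that captures any meaningful amount of data stops being local at all.

```python
def edge_length(fraction, d):
    """Edge of a sub-cube holding `fraction` of the volume of the unit hypercube [0, 1]^d."""
    return fraction ** (1 / d)

for d in (1, 2, 10, 100):
    print(f"d={d:>3}: edge needed to capture 10% of the data = {edge_length(0.10, d):.3f}")
```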

Techniques for Dimensionality Reduction

Several techniques are available for dimensionality reduction, including:

- Principal Component Analysis (PCA): PCA identifies orthogonal axes that capture maximum variance in the data, allowing for dimensionality reduction by selecting a subset of principal components.

- Linear Discriminant Analysis (LDA): LDA finds a linear subspace that maximizes class separability, making it useful for dimensionality reduction in classification problems.

- Feature Selection: As discussed earlier, feature selection techniques can also be used for dimensionality reduction by selecting a subset of relevant features.

- Manifold Learning: Manifold learning techniques, such as Isomap, Locally Linear Embedding (LLE), and t-SNE, map high-dimensional data onto a lower-dimensional manifold while preserving the local neighborhood relationships.

By employing these dimensionality reduction techniques, we can overcome the challenges posed by high-dimensional data and improve the efficiency and accuracy of our analysis.

Data Integration

Combining Multiple Datasets

Data integration involves combining multiple datasets into a single dataset to facilitate analysis and modeling. This is often necessary when data from different sources or domains needs to be merged for a comprehensive analysis. Data integration techniques can include data concatenation, joining, or merging based on common variables or keys. By integrating data, we can gain a holistic view of the problem, uncover hidden relationships, and make more informed decisions.
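
Joining on a common key is the workhorse of data integration. The sketch below is an inner hash join on two hypothetical tables held as lists of dicts. It indexes one table by key, then looks up each row of the other. Rows without a match on both sides are dropped, which is what "inner" means. With pandas, `customers.merge(orders, on="customer_id", how="inner")` does the equivalent.

```python
# Hypothetical tables from two sources sharing the key "customer_id".
customers = [
    {"customer_id": 1, "name": "Ana"},
    {"customer_id": 2, "name": "Ben"},
    {"customer_id": 3, "name": "Chen"},
]
orders = [
    {"order_id": 101, "customer_id": 1, "amount": 40.0},
    {"order_id": 102, "customer_id": 3, "amount": 15.5},
    {"order_id": 103, "customer_id": 1, "amount": 22.0},
    {"order_id": 104, "customer_id": 9, "amount": 9.9},  # no matching customer
]

def inner_join(left, right, key):
    """Combine rows from both tables whose `key` values match (a simple hash join)."""
    index = {}
    for row in left:
        index.setdefault(row[key], []).append(row)
    joined = []
    for row in right:
        for match in index.get(row[key], []):
            joined.append({**match, **row})
    return joined

merged = inner_join(customers, orders, "customer_id")
print(len(merged))  # 3: order 104 and customer Ben have no partner
```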

Resolving Data Conflicts

When integrating data from multiple sources, conflicts and inconsistencies may arise due to differences in data formats, naming conventions, or data semantics. Data preprocessing techniques help resolve these conflicts by standardizing variable names, resolving discrepancies in data types, and handling missing or duplicate records. By addressing data conflicts, we can ensure the consistency and accuracy of the integrated dataset and prevent biases in subsequent analysis.
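
A small sketch of resolving naming and type conflicts before combining two sources. The column mapping in `RENAME` and both source tables are hypothetical. The idea is to rename every source's columns into one canonical schema, then coerce values to canonical types: integer IDs and plain floats with thousands separators stripped.

```python
# Hypothetical sources that describe the same entities differently.
source_a = [{"CustomerID": "001", "Revenue": "1,200.50"}]
source_b = [{"customer_id": 2, "revenue": 830.0}]

# Hypothetical mapping from source-specific column names to the canonical schema.
RENAME = {"CustomerID": "customer_id", "Revenue": "revenue"}

def harmonize(row):
    """Rename columns to the canonical schema and coerce values to canonical types."""
    row = {RENAME.get(k, k): v for k, v in row.items()}
    row["customer_id"] = int(row["customer_id"])  # "001" -> 1
    if isinstance(row["revenue"], str):
        row["revenue"] = float(row["revenue"].replace(",", ""))  # "1,200.50" -> 1200.5
    return row

combined = [harmonize(r) for r in source_a + source_b]
print(combined)  # [{'customer_id': 1, 'revenue': 1200.5}, {'customer_id': 2, 'revenue': 830.0}]
```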

Data Sampling

Undersampling

Undersampling is a data sampling technique that rebalances an imbalanced dataset by reducing the number of instances from the majority class(es). Typically, a random subset of each majority class is kept so that its size matches the number of instances in the minority class. Undersampling can help prevent bias towards the majority class(es) and improve the performance of machine learning models on imbalanced datasets, at the cost of discarding potentially useful data.
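
A minimal random-undersampling sketch. It groups examples by label, then draws, without replacement, as many examples from each class as the smallest class contains. The 9-to-3 dataset is hypothetical, and the fixed `seed` only makes the draw reproducible.

```python
import random
from collections import Counter

def random_undersample(X, y, seed=0):
    """Randomly drop examples so every class keeps as many as the smallest class."""
    rng = random.Random(seed)
    by_class = {}
    for xi, yi in zip(X, y):
        by_class.setdefault(yi, []).append(xi)
    n_min = min(len(rows) for rows in by_class.values())
    X_out, y_out = [], []
    for label, rows in by_class.items():
        for xi in rng.sample(rows, n_min):  # sampling without replacement
            X_out.append(xi)
            y_out.append(label)
    return X_out, y_out

X = list(range(12))              # hypothetical example IDs
y = ["neg"] * 9 + ["pos"] * 3    # 3:1 imbalance
Xb, yb = random_undersample(X, y)
print(Counter(yb))  # 3 of each class
```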

Oversampling

Oversampling is a data sampling technique that addresses the issue of imbalanced datasets by increasing the number of instances in the minority class. This can be done by replicating instances from the minority class or generating synthetic data points using techniques such as SMOTE (Synthetic Minority Oversampling Technique). Oversampling helps to ensure that the minority class is adequately represented and improves the performance of models on imbalanced datasets.
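
The core idea of SMOTE is to avoid copying minority points. Instead, it places new points on the line segment between a minority point and one of its nearest minority-class neighbours. The sketch below is a simplified illustration of that idea, not a reference implementation; the function name `smote_like` and the minority points are our own. Libraries such as imbalanced-learn ship a full `SMOTE` class.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def smote_like(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points by interpolating between a random minority
    point and one of its k nearest minority neighbours (simplified SMOTE idea)."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda p: sq_dist(base, p))[:k]
        partner = rng.choice(neighbours)
        gap = rng.random()  # uniform in [0, 1): how far along the segment to place the new point
        synthetic.append(tuple(b + gap * (q - b) for b, q in zip(base, partner)))
    return synthetic

minority = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.2), (1.2, 1.8)]  # hypothetical minority class
new_points = smote_like(minority, n_new=5)
print(new_points)
```

Whichever oversampling method is used, it should be applied to the training data only, so that synthetic points derived from evaluation examples never leak into training.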

Stratified Sampling

Stratified sampling is a sampling technique that ensures representative sampling across different groups or categories within the dataset. It divides the dataset into homogeneous subgroups, or strata, based on a specific variable, then samples from each stratum in proportion to its share of the overall population. Stratified sampling preserves the distribution of the stratifying variable (for example, the class proportions when the strata are defined by the target variable) and ensures that each subgroup is adequately represented in the sample.
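
Proportional stratified sampling draws the same fraction from every stratum. The sketch below assumes records are tuples whose first element names the stratum; the 60/30/10 population is hypothetical. A 20% sample then contains 12, 6, and 2 records, preserving the original proportions. Because each stratum's count is rounded separately, the total can differ slightly from fraction × N on less convenient sizes.

```python
import random
from collections import Counter

def stratified_sample(records, stratum_of, fraction, seed=0):
    """Draw the same fraction from every stratum so group proportions are preserved."""
    rng = random.Random(seed)
    strata = {}
    for r in records:
        strata.setdefault(stratum_of(r), []).append(r)
    sample = []
    for members in strata.values():
        k = round(len(members) * fraction)  # per-stratum sample size
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical population: 60 north, 30 south, 10 east.
people = [("north", i) for i in range(60)] + [("south", i) for i in range(30)] + [("east", i) for i in range(10)]
s = stratified_sample(people, stratum_of=lambda r: r[0], fraction=0.2)
print(Counter(r[0] for r in s))  # 12 north, 6 south, 2 east
```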

Data Splitting

Training, Validation, and Test Sets

Data splitting is a crucial step in model development and evaluation. It involves dividing the dataset into three distinct subsets: the training set, the validation set, and the test set. The training set is used to train the model, and the validation set is used to tune hyperparameters and select the best model. The test set is held back until the end and used once to evaluate the final model’s performance. Data splitting helps to assess model generalization and detect overfitting. As long as the test set plays no part in any tuning decision, it provides an unbiased estimate of performance on unseen data.
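
A minimal sketch of a three-way split: shuffle once with a fixed seed, then cut the list into disjoint pieces. The 70/15/15 proportions are a common convention, not a rule. For classification, the split is often stratified on the label as described above; this sketch does not do that.

```python
import random

def train_val_test_split(items, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle once, then cut into disjoint training, validation and test subsets."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n = len(items)
    n_test = round(n * test_frac)
    n_val = round(n * val_frac)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test

train, val, test = train_val_test_split(range(100))  # hypothetical 100 examples
print(len(train), len(val), len(test))  # 70 15 15
```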

Cross-Validation

Cross-validation is a resampling technique used to assess the performance of a model and estimate its generalization ability. In k-fold cross-validation, the dataset is divided into k folds of roughly equal size, and the model is trained on k - 1 folds and validated on the remaining fold. This process is repeated k times so that each fold serves exactly once as the validation set, and the k scores are averaged. Cross-validation provides a more robust estimate of model performance by reducing the variance associated with a single train-test split and helps in selecting the most suitable model for deployment.
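
The fold bookkeeping is the heart of k-fold cross-validation. The sketch below yields, for each fold, the training indices and the validation indices. When n is not divisible by k, the first n % k folds get one extra sample, which is the same sizing scikit-learn's `KFold` uses when shuffling is off. The model training and scoring inside the loop are left out. If the data is ordered, for example sorted by class or time, shuffle it before splitting.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; every sample lands in exactly one validation fold."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size

folds = list(k_fold_indices(10, 3))
for train_idx, val_idx in folds:
    print(len(train_idx), val_idx)  # train on k - 1 folds, validate on the held-out fold
```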

In conclusion, data preprocessing is a critical step in the data analysis and machine learning pipeline. It involves a range of techniques, from data cleaning and transformation to feature selection and extraction, dimensionality reduction, data integration, sampling, and data splitting. By properly preprocessing and preparing the data, we can enhance the quality and reliability of analysis, improve the performance of machine learning models, and gain valuable insights from the data.