Welcome to an exploration of k-means clustering! K-means is a popular machine learning algorithm that groups data points into clusters based on how similar they are. In this article, you will learn what k-means clustering is, how the algorithm works, how to choose the number of clusters, and what it can (and cannot) do for your data analysis tasks. Let’s dive in!

Have you ever wondered how machines group data?

Faced with a large dataset of thousands of data points, you might wonder how all that information can be organized into meaningful groups. That’s where clustering algorithms like k-means come in handy: they discover groups in unlabeled data without being told in advance what those groups should be.

What is k-means clustering?

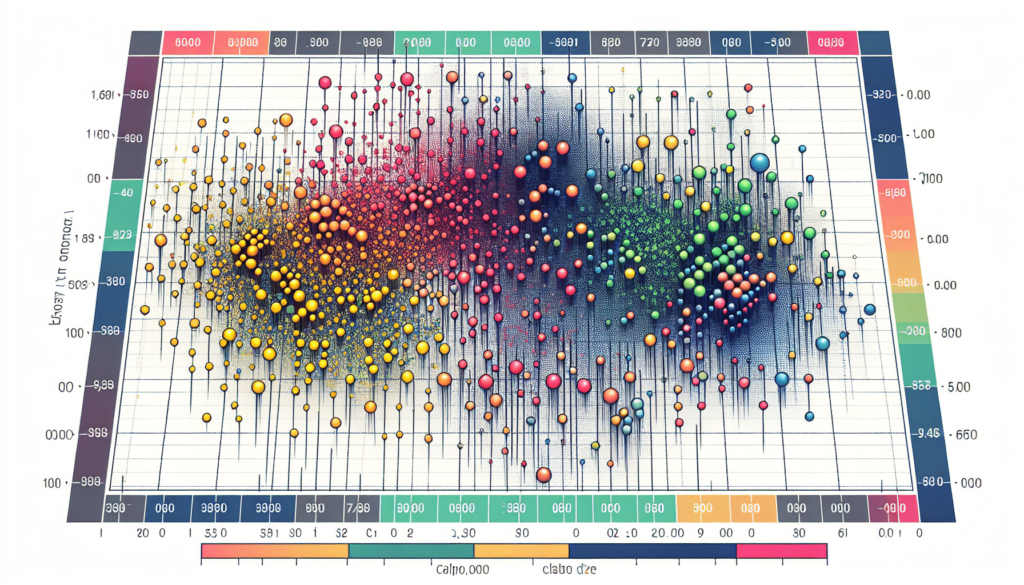

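K-means clustering is a popular unsupervised machine learning algorithm (it works on unlabeled data) that partitions a dataset into K clusters, where K is chosen in advance. Each data point belongs to the cluster whose centroid is nearest to it, so points in the same cluster tend to be similar to one another and dissimilar to points in other clusters.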

How does k-means clustering work?

The algorithm works by iteratively assigning data points to clusters and computing the centroid of each cluster. The centroid of a cluster is the mean of all the data points in that cluster.
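For readers who prefer notation, the two alternating steps and the quantity they reduce can be written as follows. The symbols are our own choice rather than taken from a specific reference: data points x_1, …, x_n, clusters C_1, …, C_K, centroids μ_1, …, μ_K, and c_i for the cluster index of point x_i.

```latex
% Assignment: each point goes to its nearest centroid
c_i = \arg\min_{j \in \{1,\dots,K\}} \lVert x_i - \mu_j \rVert^2

% Update: each centroid becomes the mean of its assigned points
\mu_j = \frac{1}{|C_j|} \sum_{x_i \in C_j} x_i

% Objective: within-cluster sum of squares (often called inertia)
J = \sum_{j=1}^{K} \sum_{x_i \in C_j} \lVert x_i - \mu_j \rVert^2
```

Neither step can increase J, which is why the iterations settle down; however, they settle into a local minimum that need not be the best possible clustering. As a small worked example, the centroid of (1, 2), (3, 4), and (5, 0) is ((1 + 3 + 5)/3, (2 + 4 + 0)/3) = (3, 2).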

Let’s break down the process of k-means clustering into simple steps:

- Initialization: Choose the number of clusters K and initialize the K centroids, for example by picking K data points at random.

- Assignment: Assign each data point to the nearest centroid based on a distance metric, commonly Euclidean distance.

- Update: Update the centroid of each cluster by computing the mean of all the data points assigned to that cluster.

- Repeat: Repeat the assignment and update steps until the cluster assignments stop changing, the centroids no longer move significantly, or a predefined maximum number of iterations is reached.
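The four steps above can be sketched in plain Python as follows. This is a minimal illustration rather than a production implementation. It assumes points are tuples of floats, uses squared Euclidean distance, initializes by sampling K data points without replacement, and treats "no longer change significantly" as a centroid movement below a small tolerance `tol`. The function names (`kmeans`, `assign`, `mean_point`, `squared_distance`) are our own.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two points of equal length."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean_point(points):
    """Component-wise mean of a non-empty list of points (a centroid)."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def assign(points, centroids):
    """Assignment step: index of the nearest centroid for every point."""
    return [
        min(range(len(centroids)), key=lambda j: squared_distance(p, centroids[j]))
        for p in points
    ]


def kmeans(points, k, max_iter=100, tol=1e-9, seed=0):
    """Minimal k-means (Lloyd's algorithm). Returns (centroids, labels)."""
    rng = random.Random(seed)
    # Initialization: k data points sampled without replacement.
    centroids = [tuple(p) for p in rng.sample(points, k)]
    for _ in range(max_iter):
        labels = assign(points, centroids)
        # Update: move each centroid to the mean of the points assigned to it.
        new_centroids = []
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            # Keep the previous centroid if its cluster is empty.
            new_centroids.append(mean_point(members) if members else centroids[j])
        # Stop once no centroid moves more than the tolerance (squared distance).
        shift = max(squared_distance(a, b) for a, b in zip(centroids, new_centroids))
        centroids = new_centroids
        if shift <= tol:
            break
    # Final assignment so the labels match the returned centroids.
    return centroids, assign(points, centroids)


data = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids, labels = kmeans(data, k=2)
print(labels)
print(centroids)
```

In practice you would usually reach for a library implementation such as scikit-learn's `KMeans` class, which adds smarter initialization and multiple restarts on top of this basic loop.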

How to choose the right value of K?

One of the challenges of using k-means clustering is determining a good number of clusters K, which the algorithm cannot infer on its own. If K is too small, distinct groups get merged; if it is too large, natural groups get split into arbitrary pieces.

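Several methods can help determine a suitable value of K, such as the elbow method, the silhouette score, and the gap statistic. The elbow method plots the within-cluster sum of squares against K and looks for the point where adding more clusters stops reducing it sharply. The silhouette score measures how much closer each point is to its own cluster than to the nearest other cluster, with values near 1 indicating well-separated clusters. The gap statistic compares the within-cluster dispersion to what would be expected for data with no clustering structure. Experimenting with different values of K and evaluating the results with these methods, together with domain knowledge, can help you find the right number of clusters for your dataset.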
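As an illustration of one of these methods, here is a minimal pure-Python sketch of the silhouette score following its standard definition. The function name, the toy data, and the convention of scoring points in single-member clusters as 0 are our choices for this example; scikit-learn provides a ready-made `sklearn.metrics.silhouette_score`. To choose K, you would cluster the data for several values of K and prefer those with higher average scores.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (Euclidean distance).

    For each point: a = mean distance to the other members of its own cluster,
    b = smallest mean distance to the members of any other cluster,
    s = (b - a) / max(a, b). Points in single-member clusters score 0.
    """
    clusters = {}
    for p, label in zip(points, labels):
        clusters.setdefault(label, []).append(p)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")
    scores = []
    for p, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            scores.append(0.0)
            continue
        # The point's distance to itself is 0, so dividing by len(own) - 1
        # gives the mean distance to the *other* members.
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        b = min(
            sum(math.dist(p, q) for q in members) / len(members)
            for other, members in clusters.items()
            if other != label
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


data = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
good = [0, 0, 0, 1, 1, 1]  # matches the two visible groups
bad = [0, 1, 0, 1, 0, 1]   # mixes the groups together
good_score = silhouette_score(data, good)
bad_score = silhouette_score(data, bad)
print(round(good_score, 3), round(bad_score, 3))
```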

The advantages of k-means clustering

K-means clustering has several advantages that make it a popular choice for clustering large datasets:

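- Efficiency: Each iteration takes time roughly proportional to the number of points times the number of clusters times the number of features, and in practice the algorithm typically converges within a modest number of iterations.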

- Scalability: Because each point's assignment is computed independently of the others, the assignment step parallelizes naturally, which helps the algorithm scale to large datasets.

- Ease of implementation: K-means is easy to implement and understand, making it accessible to beginners in machine learning.

- Versatility: The algorithm can be applied to many kinds of numeric data, as long as Euclidean distance is a meaningful way to compare data points.

Real-world applications of k-means clustering

K-means clustering is used in a wide range of applications across different industries. Some common applications include:

- Customer segmentation: Businesses use k-means clustering to group customers based on their purchasing behavior and demographics.

- Image segmentation: In image processing, k-means clustering is used to partition an image into different regions for further analysis.

- Anomaly detection: K-means clustering can help flag outliers or anomalies, since points that lie unusually far from every centroid, or that form very small clusters of their own, are candidates for closer inspection.
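The distance-based idea behind the anomaly-detection use case can be sketched as below. The centroids are hard-coded, hypothetical stand-ins for the output of a fitted k-means model, and the cutoff rule (mean plus two standard deviations of the nearest-centroid distances) is an illustrative assumption, not a standard; a suitable threshold depends on the data and the application.

```python
import math
import statistics


def flag_anomalies(points, centroids, n_std=2.0):
    """Flag points whose distance to the nearest centroid is unusually large."""
    # Distance from each point to its nearest centroid.
    dists = [min(math.dist(p, c) for c in centroids) for p in points]
    # Illustrative cutoff (an assumption): mean + n_std population std devs.
    threshold = statistics.mean(dists) + n_std * statistics.pstdev(dists)
    return [d > threshold for d in dists]


# Hypothetical centroids, standing in for a fitted k-means model.
centroids = [(1.0, 1.0), (8.0, 8.0)]
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1),
          (8.0, 8.0), (8.1, 7.9), (7.8, 8.2),
          (30.0, -10.0)]
flags = flag_anomalies(points, centroids)
print(flags)
```

Keep in mind that if such outliers are included when the model is fitted, they can drag the centroids toward themselves and partly mask their own distance.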

Limitations of k-means clustering

While k-means clustering has its advantages, there are also some limitations to be aware of when using this algorithm:

- Sensitivity to initialization: K-means clustering is sensitive to the initial placement of centroids. It only converges to a local optimum, so different starting centroids can produce different, and sometimes clearly worse, clusterings.

- Cluster shape: The algorithm assumes that clusters are spherical and of similar size, which may not always hold true in real-world datasets.

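- Number of clusters: K must be specified in advance, and choosing it well can be challenging and may require domain knowledge.

- Sensitivity to outliers: Because each centroid is the mean of its cluster's points, a few extreme values can pull a centroid away from the bulk of its cluster.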
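One widely used remedy for sensitivity to initialization is k-means++ seeding, which picks each new starting centroid with probability proportional to its squared distance from the centroids already chosen. The sketch below assumes points are tuples of numbers and uses a seeded `random.Random` for reproducibility; the function name is ours. Library implementations such as scikit-learn's `KMeans` use k-means++ by default and can also rerun the algorithm from several initializations via `n_init`.

```python
import math
import random


def kmeans_plus_plus_init(points, k, seed=0):
    """Choose k initial centroids with k-means++ seeding."""
    rng = random.Random(seed)
    # First centroid: a data point chosen uniformly at random.
    centroids = [rng.choice(points)]
    while len(centroids) < k:
        # D(x)^2: squared distance from each point to its nearest chosen centroid.
        d2 = [min(math.dist(p, c) ** 2 for c in centroids) for p in points]
        # Draw the next centroid with probability proportional to D(x)^2, so
        # far-away points are much more likely to be picked. (If fewer than k
        # distinct points exist, all weights become 0 and this raises ValueError.)
        centroids.append(rng.choices(points, weights=d2, k=1)[0])
    return centroids


data = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids = kmeans_plus_plus_init(data, k=2)
print(centroids)
```

These seeds would then replace the random initialization step before running the usual assignment and update loop.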

Tips for improving k-means clustering results

To overcome some of the limitations of k-means clustering and improve the quality of clustering results, consider the following tips:

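- Feature scaling: Normalize or standardize your data so that all features contribute comparably to the distance calculation. Otherwise, features with large numeric ranges (for example, income in dollars versus age in years) will dominate the clustering.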

- Feature selection and dimensionality reduction: Drop irrelevant features, or use a dimensionality reduction technique such as principal component analysis (PCA), to reduce noise in the dataset.

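- Initialization methods: Use a smarter initialization technique such as k-means++, which spreads the initial centroids apart, and run the algorithm several times with different random starts, keeping the result with the lowest within-cluster sum of squares. Mini-batch k-means, often mentioned alongside it, is not an initialization method but a faster variant that updates the centroids using small random batches of data, which is useful for very large datasets.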

- Outlier detection: Identify and remove outliers from the dataset before applying k-means clustering to prevent them from affecting the clustering results.
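The feature-scaling tip can be illustrated with z-score standardization, one common choice among several (min-max normalization is another). This is a minimal sketch: it assumes every point is a tuple of numbers with the same features in the same order, the function name is ours, and the age/income rows are made-up sample values.

```python
import statistics


def standardize(points):
    """Rescale every feature to zero mean and unit (population) variance."""
    columns = list(zip(*points))
    means = [statistics.mean(col) for col in columns]
    # A constant feature has standard deviation 0; divide by 1.0 instead.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return [
        tuple((x - m) / s for x, m, s in zip(p, means, stds))
        for p in points
    ]


# Made-up (age in years, income in dollars) rows: unscaled, income would
# dominate any Euclidean distance between customers.
customers = [(25, 40000), (35, 60000), (45, 80000)]
scaled = standardize(customers)
print(scaled)
```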

Conclusion

K-means clustering is a simple yet powerful algorithm for grouping data points into clusters based on their similarity. By understanding how it works, how to choose K, and where its assumptions hold or break down, you can apply it effectively to a wide range of real-world problems. Experiment with k-means clustering on your own datasets and discover the insights waiting to be uncovered!