Picture yourself at the crossroads of technology and biology, looking into the field of artificial intelligence. This article introduces the perceptron, one of the earliest and simplest building blocks of machine learning. We’ll walk through how the algorithm works, how it is trained, where it is used, and where it falls short. Along the way you’ll see how a single artificial neuron, loosely inspired by the brain, learns to recognize simple patterns and make decisions.

Introduction to Perceptron

Definition of perceptron

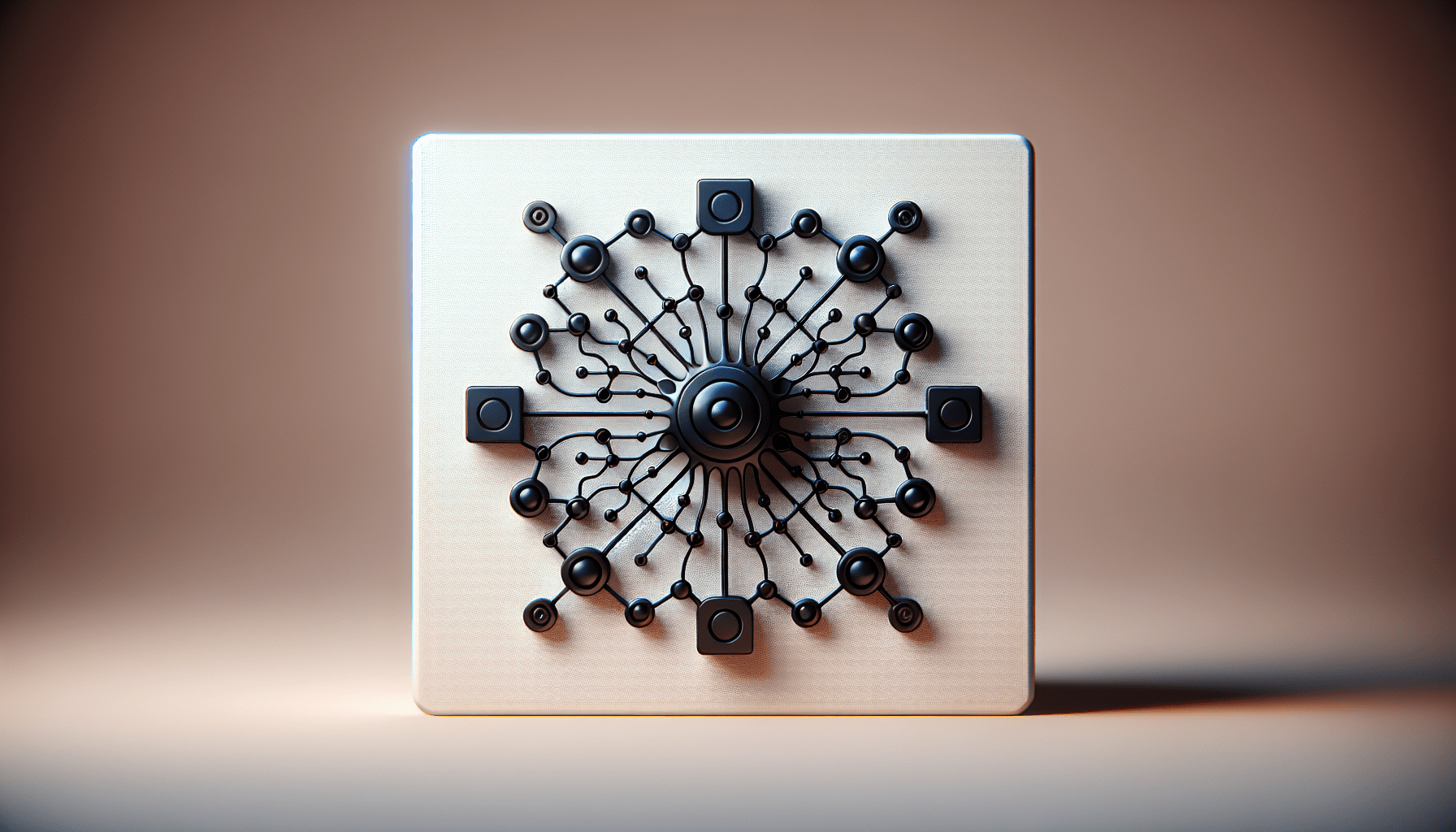

A perceptron is a basic computational unit in artificial neural networks (ANNs), loosely modeled on a biological neuron. It is a binary classifier and one of the simplest forms of neural network used for machine learning tasks. The perceptron takes a set of inputs, computes their weighted sum, and produces an output of 0 or 1 depending on whether that sum crosses a threshold.

History of perceptron

The perceptron was first developed in the late 1950s by Frank Rosenblatt, an American psychologist and computer scientist. Rosenblatt’s work on perceptrons helped lay the groundwork for neural network research within artificial intelligence and machine learning. He aimed to create an algorithm that could learn and make decisions based on sensory inputs, similar to how humans do.

Purpose of perceptron

The purpose of the perceptron is to perform binary classification by learning from labeled examples provided during the training phase. It suits problems in pattern recognition, classification, and prediction where the two classes can be separated by a straight line or plane. Perceptron-style units are also the building blocks of the larger networks used in image and speech recognition, natural language processing, and predictive modeling.

Working Principle

Basic concept of perceptron

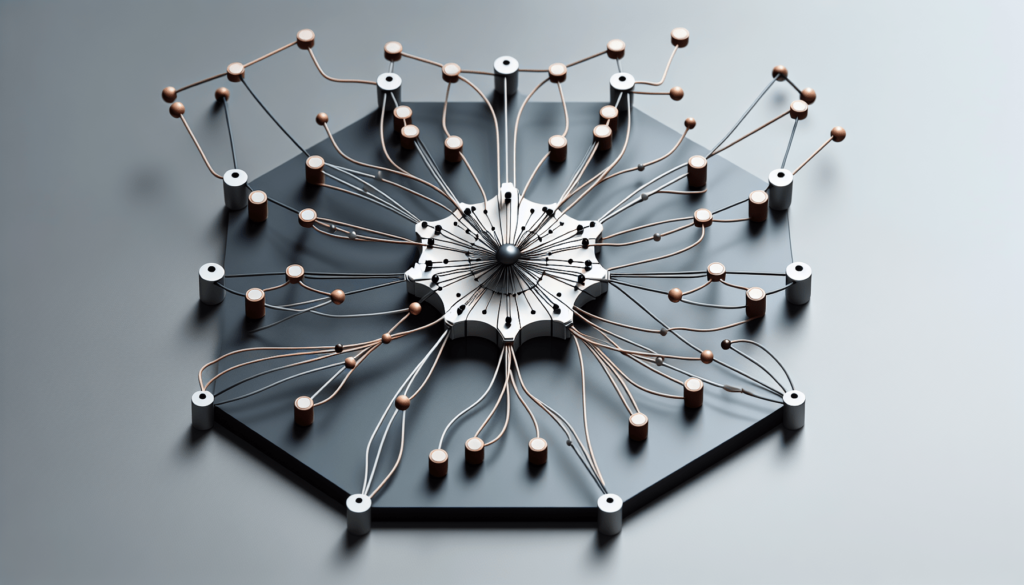

The basic concept of a perceptron is inspired by how neurons in the human brain work. It consists of multiple inputs, each with an associated weight, which are summed together. The sum is then passed through an activation function to produce an output. The perceptron essentially decides whether the input belongs to one class or another based on the calculated output.

Input and output layers

A perceptron typically consists of an input layer and an output layer. The input layer receives the features or inputs, which can be numerical or binary values. The output layer produces the final classification output, which is usually a binary value representing the predicted class.

Weights and Bias

Each input to the perceptron is assigned a weight, which determines its influence on the output. The weights are adjusted during the training phase to improve classification accuracy. Additionally, a bias term is added to the weighted sum to act as an adjustable activation threshold. The bias shifts the decision boundary away from the origin, so the perceptron can still fire when all of its inputs are zero.
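To make the role of the bias concrete, here is a minimal sketch. The function name `fires` and the weight and bias values are hand-picked for illustration (they are not learned, and they do not come from any library); the unit simply outputs 1 when the weighted input plus bias is positive.

```python
def fires(x, weight, bias):
    """Return 1 if the weighted input plus the bias is positive, else 0."""
    return 1 if x * weight + bias > 0 else 0

# Illustrative, hand-picked values (an assumption, not learned): a logical NOT unit.
weight, bias = -1.0, 0.5
print(fires(0, weight, bias))  # 1, the bias alone pushes the sum above zero
print(fires(1, weight, bias))  # 0, the negative weight outweighs the bias

# Without a bias, a zero input always gives a sum of zero, whatever the weight.
print(fires(0, weight, 0.0))   # 0
```

With the bias removed, no choice of weight can make this unit output 1 for an input of 0, which is why the bias is treated as a learnable parameter alongside the weights.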

Activation function

The activation function is a crucial component of a perceptron. It determines whether the perceptron should fire or remain inactive based on the weighted sum of inputs and the bias. The most commonly used activation function in perceptrons is the step function, which outputs 1 if the sum exceeds a threshold value, and 0 otherwise.
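As a small sketch of the step function described above, assuming a default threshold of 0 (the name `step` and the default value are illustrative choices):

```python
def step(z, threshold=0.0):
    """Step activation: 1 if z exceeds the threshold, otherwise 0."""
    return 1 if z > threshold else 0

print(step(0.7))   # 1
print(step(-0.2))  # 0
print(step(0.0))   # 0, because 0.0 does not exceed the threshold
```

Some formulations fire when the sum is greater than or equal to the threshold instead; either convention works as long as it is applied consistently.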

Feedforward process

The feedforward process in a perceptron involves calculating the weighted sum of inputs and passing it through the activation function to generate an output. During this process, each input is multiplied by its corresponding weight, summed up, and then adjusted by the bias term. The result is then passed through the activation function to determine the final output.
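The feedforward pass can be sketched in a few lines. The weights and bias below are hand-picked (an assumption for illustration, not trained values) so that the unit behaves like a logical AND gate; `predict` is a hypothetical helper name.

```python
def predict(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias, passed through a step function."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if weighted_sum > 0 else 0

# Hand-picked parameters that make the unit act like logical AND.
weights = [1.0, 1.0]
bias = -1.5

outputs = [predict([a, b], weights, bias) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(outputs)  # [0, 0, 0, 1]
```

Only the input (1, 1) produces a weighted sum above zero (1 + 1 - 1.5 = 0.5), so it is the only case where the perceptron fires.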

Weight adjustment process

The weight adjustment process is a crucial step in training the perceptron. It involves updating the weights based on the error between the predicted output and the desired output. By iteratively adjusting the weights, the perceptron fine-tunes its decision-making process to improve accuracy.
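One common form of this update is the classic perceptron learning rule: each weight moves by the learning rate times the error times its input. The sketch below shows a single update; the function name `update` and the default learning rate of 0.1 are illustrative assumptions.

```python
def update(weights, bias, inputs, target, learning_rate=0.1):
    """Apply one perceptron-rule update and return the new weights and bias."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    prediction = 1 if weighted_sum > 0 else 0
    error = target - prediction  # -1, 0, or +1
    new_weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
    new_bias = bias + learning_rate * error
    return new_weights, new_bias

# The unit wrongly predicts 0 for a positive example, so the weights are nudged upward.
w, b = update([0.0, 0.0], 0.0, inputs=[1, 1], target=1)
print(w, b)  # [0.1, 0.1] 0.1
```

When the prediction is already correct the error is zero and nothing changes, so only misclassified examples move the weights.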

Termination condition

The termination condition in perceptron training specifies when the learning process should stop. This condition can be defined based on the number of iterations, the error rate, or the convergence of the weights. If the training data is linearly separable, the perceptron learning rule eventually stops making mistakes, so training can end once a full pass produces no errors. If it is not, the weights never settle, and a cap on the number of iterations is needed to stop training.
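Here is a minimal training loop that combines two of these stopping criteria: it ends as soon as a full pass over the data produces no errors, and otherwise gives up after `max_epochs` passes. The function name, the zero initialization, and the learning rate of 1.0 (chosen to keep the arithmetic exact) are assumptions made for this sketch.

```python
def train(samples, learning_rate=1.0, max_epochs=100):
    """Train a perceptron; stop on an error-free pass or after max_epochs."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for inputs, target in samples:
            weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
            prediction = 1 if weighted_sum > 0 else 0
            error = target - prediction
            if error != 0:
                errors += 1
                weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if errors == 0:  # termination: every example classified correctly
            return weights, bias, epoch
    return weights, bias, max_epochs  # termination: iteration budget exhausted

and_data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias, epochs_used = train(and_data)
print(weights, bias, epochs_used)
```

Because the AND data is linearly separable, the loop ends through the error-free check well before the epoch cap is reached.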

Training the Perceptron

Importance of training

Training is a critical phase in the perceptron’s lifecycle, as it equips the network with the ability to make accurate predictions or classifications. Through training, the perceptron learns to adjust its weights and biases to minimize the error between predicted outputs and the actual outputs, thereby improving its performance.

Supervised learning

The perceptron employs supervised learning, where it is provided with labeled examples during the training phase. These examples consist of input features along with their corresponding target outputs. By comparing the predicted outputs with the expected outputs, the perceptron adjusts its parameters to minimize the error.

Backpropagation algorithm

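The backpropagation algorithm is a widely used method for training networks built from multiple layers of perceptron-like units. A single-layer perceptron has no hidden layers, so it is trained directly with the weight adjustment process described earlier. In a multilayer network, backpropagation propagates the error from the output layer back toward the input layer and updates the weights based on the gradient of the error function. Because that gradient must exist, the step function is replaced by a differentiable activation such as the sigmoid. Backpropagation lets such networks adjust all of their weights efficiently, making them capable of solving complex problems.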

Gradient descent

Gradient descent is an optimization algorithm used in conjunction with backpropagation to update the weights of a perceptron. It calculates the gradient of the error function with respect to the weights and biases, and adjusts them in the direction of steepest descent. This iterative process helps the perceptron converge to a local minimum of the error function.
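The sketch below applies gradient descent to a single unit with a sigmoid activation, since the step function has no useful gradient. The mean squared error, the one-dimensional toy dataset, and the learning rate of 0.5 are all assumptions chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def mean_squared_error(w, b, samples):
    return sum((sigmoid(w * x + b) - y) ** 2 for x, y in samples) / len(samples)

def gradient_step(w, b, samples, learning_rate):
    """Move w and b one step against the gradient of the mean squared error."""
    grad_w = grad_b = 0.0
    for x, y in samples:
        out = sigmoid(w * x + b)
        delta = 2 * (out - y) * out * (1 - out)  # chain rule through the sigmoid
        grad_w += delta * x
        grad_b += delta
    n = len(samples)
    return w - learning_rate * grad_w / n, b - learning_rate * grad_b / n

samples = [(0.0, 0), (1.0, 1)]  # toy data: output should follow the input
w, b = 0.0, 0.0
error_before = mean_squared_error(w, b, samples)  # 0.25
for _ in range(1000):
    w, b = gradient_step(w, b, samples, learning_rate=0.5)
error_after = mean_squared_error(w, b, samples)
print(error_before, error_after)
```

Each step nudges the parameters in the direction that reduces the error, so `error_after` ends up below the starting value of 0.25.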

Epochs and learning rate

During training, the perceptron makes multiple passes over the training data, known as epochs. In each epoch, the perceptron processes the entire training dataset to update its weights and biases. The learning rate is a parameter that determines the step size of each weight adjustment. A higher learning rate can lead to faster convergence, but may also overshoot the optimal weights; a lower learning rate is more stable but needs more epochs.
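To see overshooting in isolation, the following sketch runs gradient descent on a made-up one-weight error function, E(w) = (w - 3)^2, whose best weight is 3. The error function, the step count, and both learning rates are assumptions picked purely to illustrate the trade-off.

```python
def descend(learning_rate, steps=20, start=0.0):
    """Run gradient descent on the toy error E(w) = (w - 3)**2."""
    w = start
    for _ in range(steps):
        gradient = 2 * (w - 3.0)  # derivative of (w - 3)**2
        w -= learning_rate * gradient
    return w

careful = descend(learning_rate=0.1)   # small steps settle close to 3
reckless = descend(learning_rate=1.1)  # large steps overshoot further each time
print(careful, reckless)
```

With the small learning rate the weight creeps toward 3, while the large one jumps past the minimum on every step and ends up far away from it.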

Applications of Perceptron

Pattern recognition

Perceptrons are widely used for pattern recognition tasks. They excel at identifying and classifying patterns in data, making them valuable in applications such as detecting fraud, recognizing handwritten characters, and identifying medical conditions based on symptoms.

Image and speech recognition

Networks built from perceptron-like units have found extensive use in image and speech recognition systems. They can be trained to recognize specific objects or spoken words by analyzing the patterns and features within the input data. Such systems have contributed to advancements in fields like autonomous vehicles, virtual assistants, and security systems.

Natural language processing

In natural language processing, perceptrons are utilized for tasks such as sentiment analysis, text classification, and named entity recognition. By analyzing the semantic and syntactic features of textual data, perceptrons can accurately determine sentiments, categorize documents, and extract relevant information from text.

Classifying data

The ability of perceptrons to classify data based on learned patterns makes them valuable in various classification tasks. They can be used to classify data points into different categories, such as spam detection in emails, credit risk assessment, and predicting customer churn in businesses.

Predictive modeling

Perceptrons are also used for predictive modeling, where they learn to make predictions based on historical data. By analyzing the relationships between input features and target outputs, perceptron-based models can forecast future trends, recommend products, and optimize resource allocation in fields like finance, marketing, and healthcare.

Advantages of Perceptron

Simplicity and interpretability

One of the major advantages of perceptrons is their simplicity and interpretability. The perceptron’s architecture and operation can be easily understood, making it accessible to both experts and newcomers in machine learning. Additionally, the weights and biases in a trained perceptron can provide valuable insights into the importance and influence of different input features.

Ability to handle complex problems

While a single perceptron is conceptually simple, perceptrons can be combined to handle complex problems. Stacked into multiple layers, as in deeper neural network architectures, they can tackle tasks that require more sophisticated decision-making, such as image recognition in deep learning models.

Parallel processing capabilities

Perceptron computations parallelize naturally. Each input-weight product is independent of the others, and in a layer of perceptrons every unit can compute its weighted sum at the same time. This makes perceptrons well-suited to parallel hardware architectures, leading to faster and more efficient processing of large datasets.

Real-time processing

Perceptrons can offer real-time processing because a prediction requires only a weighted sum and a threshold comparison. This makes perceptron-based systems a good fit for applications that require immediate responses, such as real-time monitoring, control systems, and autonomous decision-making.

Limitations of Perceptron

Linearity assumption

One of the limitations of perceptrons is that they assume the classes are linearly separable, meaning a single straight line or plane can divide them. If the relationship between inputs and outputs is non-linear, as in the classic XOR problem, a single perceptron cannot represent it, no matter how long it trains. However, this limitation can be overcome by combining multiple perceptrons into layers and using non-linear activation functions.
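The XOR case can be checked directly. The sketch below trains a single perceptron with the classic learning rule on OR (linearly separable) and on XOR (not separable) and reports whether an error-free pass was ever reached. The function name `converges`, the learning rate of 1.0, and the epoch cap of 1000 are assumptions for this illustration.

```python
def converges(samples, learning_rate=1.0, max_epochs=1000):
    """Return True if the perceptron rule reaches an error-free pass."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        errors = 0
        for inputs, target in samples:
            weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
            error = target - (1 if weighted_sum > 0 else 0)
            if error != 0:
                errors += 1
                weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if errors == 0:
            return True
    return False

or_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
xor_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

print(converges(or_data))   # True
print(converges(xor_data))  # False
```

No straight line separates the XOR outputs, so at least one of the four points is misclassified on every pass and the loop always runs out of epochs.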

Limited ability for complex tasks

While perceptrons can handle many tasks, they have limitations when it comes to solving complex problems. For instance, tasks requiring long-term memory or handling sequential data may require more sophisticated architectures like recurrent neural networks. Perceptrons alone may struggle to capture the temporal dependencies and nuances in such tasks.

Susceptibility to noise

Perceptrons are sensitive to noise in input data. Because the step function switches abruptly, a small variation in an input that lies near the decision boundary can flip the output from one class to the other. Noisy or mislabeled training examples can also keep the weights from settling. This makes it essential to pre-process the input data and ensure that it is clean and accurate. Techniques like feature scaling and outlier detection can help mitigate the impact of noise.

Dependence on training data quality

The performance of a perceptron heavily relies on the quality and representativeness of the training data. If the training data is biased, unrepresentative, or insufficient, the perceptron may have difficulty generalizing to new, unseen data. It is crucial to carefully curate and prepare the training dataset to ensure optimal performance and generalization capability.

Comparison with Other Neural Networks

Differences with multilayer perceptron

The perceptron, with a single layer, is fundamentally different from the multilayer perceptron (MLP), which adds one or more hidden layers. Unlike single perceptrons, MLPs can handle complex non-linear relationships by combining non-linear activation functions with multiple layers of interconnected units. MLPs excel at learning complex patterns and have broader application capabilities compared to single-layer perceptrons.
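To illustrate what a hidden layer buys, this sketch wires three step units into a tiny two-layer network that computes XOR, a function a single perceptron cannot represent. All weights and biases are set by hand for illustration (an assumption; a real MLP would learn them during training).

```python
def unit(inputs, weights, bias):
    """A single step-activated perceptron."""
    return 1 if sum(x * w for x, w in zip(inputs, weights)) + bias > 0 else 0

def xor_network(x1, x2):
    # Hidden layer: one unit acts as OR, the other as NAND (hand-set weights).
    h_or = unit([x1, x2], [1.0, 1.0], -0.5)
    h_nand = unit([x1, x2], [-1.0, -1.0], 1.5)
    # Output layer: AND of the two hidden units.
    return unit([h_or, h_nand], [1.0, 1.0], -1.5)

outputs = [xor_network(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(outputs)  # [0, 1, 1, 0]
```

The hidden units re-describe the inputs in a form where the classes become linearly separable, which is the key idea behind multilayer networks.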

Comparison with radial basis function network

Radial Basis Function (RBF) networks differ from perceptrons in terms of their architecture and learning approach. RBF networks use Gaussian radial basis functions instead of step functions as activation functions, so each hidden unit responds to how close an input is to a center point rather than to which side of a boundary it falls on. RBF networks are efficient in solving pattern recognition problems, especially ones with radial symmetry, whereas a single perceptron separates classes with one linear boundary.
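A Gaussian radial basis activation can be sketched as follows. The function name, the `width` parameter, and the sample points are illustrative assumptions; the formula is the standard Gaussian of the squared distance between the input and a center.

```python
import math

def gaussian_rbf(x, center, width=1.0):
    """Gaussian response that peaks at the center and decays with distance."""
    squared_distance = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-squared_distance / (2 * width ** 2))

center = [1.0, 1.0]
print(gaussian_rbf([1.0, 1.0], center))  # 1.0 at the center
print(gaussian_rbf([2.0, 1.0], center))  # about 0.61 one unit away
print(gaussian_rbf([4.0, 1.0], center))  # close to 0 far from the center
```

Unlike a step unit, the response depends only on distance from the center, which is why these units suit problems with radial symmetry.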

Contrast with recurrent neural networks

Recurrent Neural Networks (RNNs) are specialized neural networks designed to handle sequential data and maintain memory of past inputs. Unlike traditional perceptrons, RNNs have feedback connections that enable them to capture temporal dependencies. RNNs are commonly used in tasks like language modeling, speech recognition, and time series prediction.

Recent Developments in Perceptron

Deep learning and neural networks

Deep learning, a subfield of machine learning, has brought significant advancements to perceptron-based models. Deep neural networks, consisting of multiple layers of interconnected perceptrons, have revolutionized many domains, including computer vision, natural language processing, and speech recognition. Deep learning has expanded the capabilities of perceptrons by enabling them to learn high-level representations.

Advancements in activation functions

The choice of activation function plays a crucial role in the performance of a perceptron. Over the years, various activation functions beyond the simple step function have been developed. Functions like sigmoid, hyperbolic tangent, and rectified linear unit (ReLU) have gained popularity due to their ability to model non-linear relationships more effectively. These advancements have improved the learning capacity of perceptrons.
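For reference, the three activation functions named above can be written in a few lines of plain Python. This is a minimal sketch using only the standard `math` module; the helper names are illustrative.

```python
import math

def sigmoid(z):
    """Squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Squashes any real number into the range (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Passes positive values through and clips negatives to zero."""
    return max(0.0, z)

for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), tanh(z), relu(z))
```

Unlike the step function, sigmoid and tanh change smoothly with their input, and ReLU has a usable slope for positive inputs, which is what makes gradient-based training possible.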

Improved training algorithms

Researchers have developed training algorithms that enhance the efficiency and performance of perceptron-based networks. Optimizers such as Stochastic Gradient Descent (SGD), RMSprop, and Adaptive Moment Estimation (Adam) have improved the speed and convergence of training, making the learning process more efficient and effective.

Hardware accelerations

Advancements in hardware technologies, such as graphics processing units (GPUs) and specialized neural network accelerators, have accelerated the training and inference processes of perceptron-based models. These hardware accelerations increase computing power and enable larger and more complex networks to be trained and deployed efficiently.

Future Trends

Enhancements in perceptron architecture

In the future, perceptron architectures are likely to be further enhanced to handle more complex problems. Researchers will focus on improving the activation functions, exploring new network structures, and incorporating advanced techniques like attention mechanisms and memory modules. These enhancements will allow perceptrons to tackle a broader range of tasks and improve their overall performance.

Integration with other artificial intelligence frameworks

As perceptrons continue to evolve, they are likely to be integrated with other artificial intelligence frameworks, such as reinforcement learning and generative models. Combining the strengths of different techniques will enable more intelligent and versatile systems that can learn, reason, and make decisions in complex environments.

Exploration of hybrid models

Hybrid models combining perceptrons with other machine learning techniques, such as support vector machines, genetic algorithms, and fuzzy logic, are expected to be explored in the future. These hybrid models can leverage the strengths of different approaches to improve performance in specific domains or tasks.

Applications in emerging technologies

Perceptrons are anticipated to find applications in emerging technologies such as autonomous vehicles, robotics, and Internet of Things (IoT). Their ability to process real-time data and make fast decisions makes them well-suited for these domains. Perceptron-based systems can contribute to advancements in self-driving cars, intelligent robotics, and smart city infrastructure.

Conclusion

In conclusion, perceptrons are a fundamental component of artificial neural networks and have played a significant role in the development of machine learning. Their simplicity and interpretability make them a valuable starting point, and combined into larger networks they handle a wide range of tasks. This article has covered the perceptron’s basic working principles, training process, applications, and limitations. Understanding and harnessing the power of perceptrons can unlock new possibilities for solving problems, making predictions, and advancing artificial intelligence.