Neural networks let machines process large amounts of information, learn from it, and make decisions without being explicitly programmed for every case. This article is a friendly introduction to what neural networks are and how they work. It covers their history, their basic components, the main network types, how training works, where they are applied, and their key advantages and limitations.

Definition of Neural Network

A neural network is a computational model inspired by the structure and function of the human brain. It is a system of interconnected artificial neurons, designed to process and analyze complex information, recognize patterns, and make decisions based on those patterns. Neural networks have the ability to learn and improve their performance over time, making them a powerful tool in various fields such as artificial intelligence, machine learning, and data analysis.

Historical Background

Early Developments

The concept of artificial neural networks dates back to 1943, when Warren McCulloch and Walter Pitts published the first mathematical model of a neuron. They showed that networks of simple binary threshold units could implement logical functions such as AND, OR, and NOT. This idea paved the way for the development of more sophisticated neural network models.

Artificial Neural Networks (ANNs)

In the late 1950s and 1960s, the field of artificial neural networks experienced significant advancements. Frank Rosenblatt introduced the perceptron, a single-layer neural network that learns to perform pattern recognition tasks, and Bernard Widrow and Ted Hoff developed the closely related ADALINE model. However, a single-layer perceptron can only learn linearly separable problems. It cannot represent the XOR function, for example, a limitation that Marvin Minsky and Seymour Papert analyzed in 1969. This contributed to a decline in interest in neural networks.

Deep Neural Networks (DNNs)

The resurgence of neural networks came in the 1980s, when backpropagation was popularized as a practical way to train multilayer neural networks, the forerunners of today's deep neural networks. These networks had multiple layers of interconnected neurons, enabling them to learn hierarchical representations of data and solve more complex problems. In later decades, the availability of larger datasets and more powerful computing resources, particularly GPUs, made it practical to train much deeper networks and led to rapid advancements in the field.

Basic Components of a Neural Network

Neurons

Neurons are the fundamental building blocks of a neural network. They receive input from other neurons, process the input, and produce an output. Each neuron is connected to multiple other neurons through weighted connections, allowing for the flow of information throughout the network.

Weights

Weights are assigned to the connections between neurons in a neural network. These weights determine the strength of the connection and have a significant impact on the output of the neuron. During the training phase, the weights are adjusted to optimize the performance of the network.

Bias

Bias is an additional learnable parameter that is added to a neuron's weighted sum before the activation function is applied. It shifts the point at which the neuron activates, which allows the neuron to produce a non-zero output even when all input values are zero. Despite the name, it is unrelated to bias in the sense of prejudice or unfairness.

Activation Function

The activation function determines the output of a neuron based on the weighted sum of its inputs. It introduces nonlinearity into the network, allowing the neural network to model complex relationships between inputs and outputs.
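
The four components above (inputs, weights, bias, activation function) can be combined into a single artificial neuron. The following is a minimal sketch in plain Python, assuming a sigmoid activation; the function names and the numeric values are hypothetical and chosen only for illustration.

```python
import math

def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias, passed through the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values, chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1

print(neuron_output(inputs, weights, bias))

# With all-zero inputs, the weights have no effect and only the bias matters.
print(neuron_output([0.0, 0.0, 0.0], weights, 0.0))  # 0.5
print(neuron_output([0.0, 0.0, 0.0], weights, 2.0))  # greater than 0.5
```

Changing the bias moves the output for zero inputs away from 0.5, which is the shift the Bias section describes.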

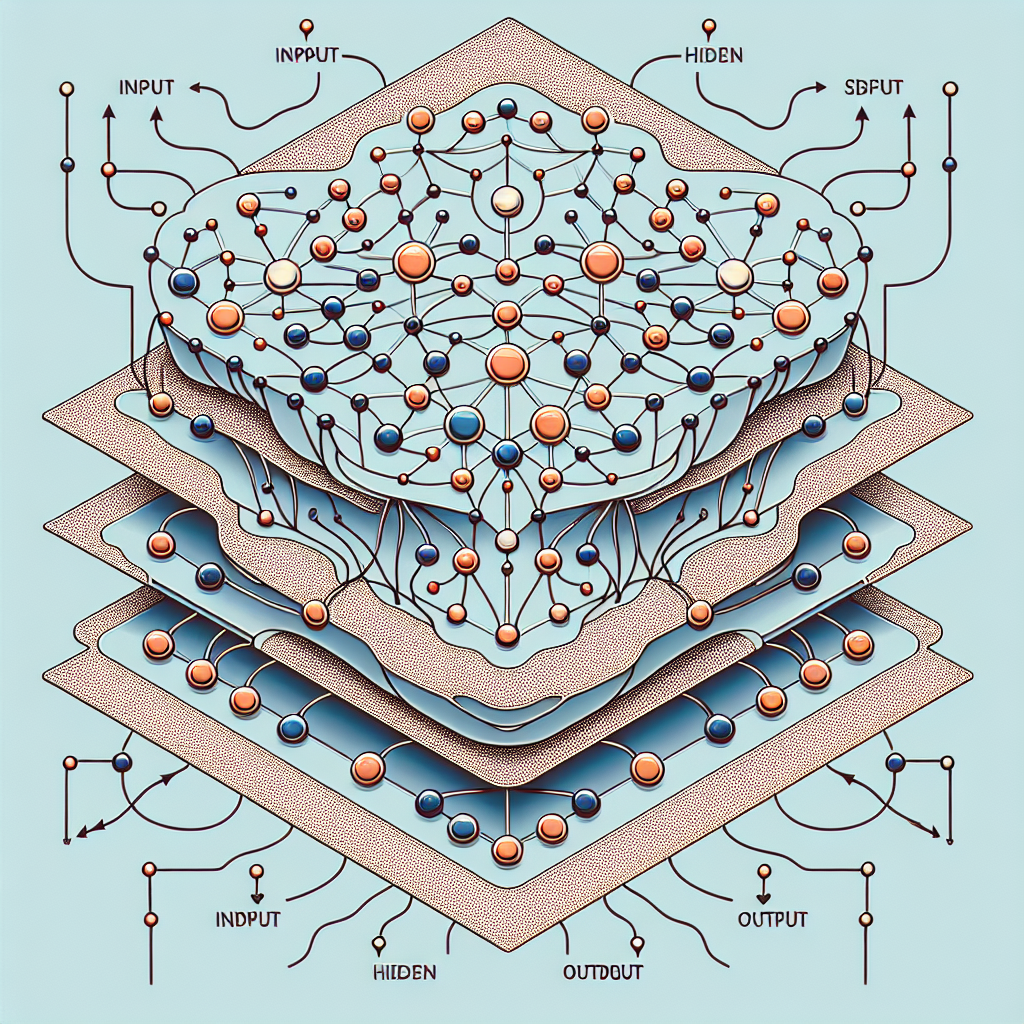

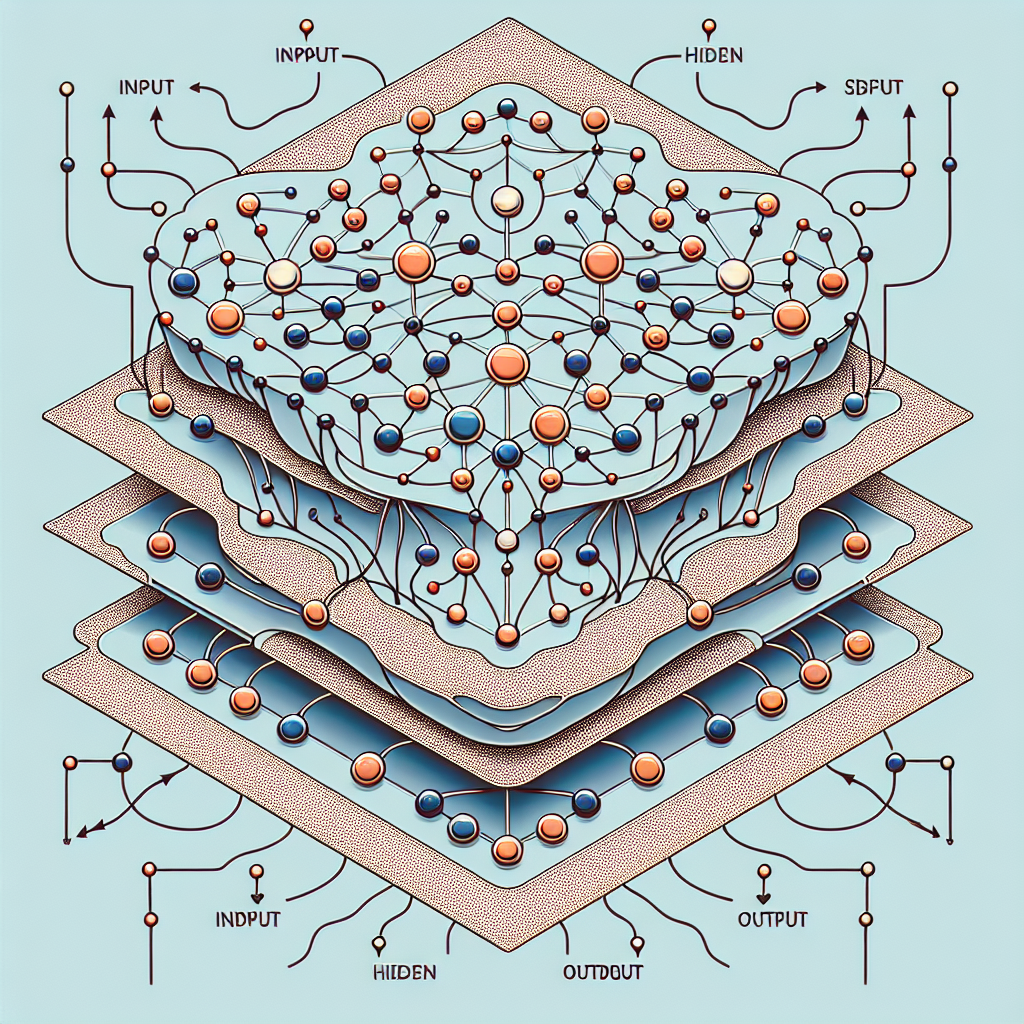

Layers

Neural networks consist of multiple layers of interconnected neurons. The input layer receives the initial input data, which is then passed through one or more hidden layers before reaching the output layer. Each layer in the network performs a specific computation and contributes to the overall output of the network.

Types of Neural Networks

Feedforward Neural Networks

Feedforward neural networks are the simplest type of neural networks, where the information flows in only one direction, from the input layer to the output layer. They are used for tasks such as classification, regression, and pattern recognition.

Recurrent Neural Networks

Recurrent neural networks (RNNs) have connections between neurons that form directed cycles, allowing them to retain information from previous computations. This makes them suitable for tasks that involve sequential or time-dependent data, such as speech recognition and natural language processing.
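
To make the idea of retained information concrete, here is a minimal sketch of a single recurrent unit with a scalar hidden state. It assumes the simple (Elman-style) recurrence with a tanh activation; the parameter names `w_x`, `w_h`, and `b` and their values are hypothetical.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step: the new state depends on the input and the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.8, w_h=0.5, b=0.0):
    """Feed a sequence through the unit, starting from a zero state."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Same inputs at steps 2 and 3, but a different first input.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])
print(states_a)
print(states_b)
```

The two sequences end with identical inputs, yet the final state of the first one is still non-zero: the unit carries a fading trace of the earlier input forward through its hidden state.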

Convolutional Neural Networks

Convolutional neural networks (CNNs) are primarily used for image classification and object detection tasks. They leverage the concept of convolution to extract features from input data, making them highly effective in analyzing visual information.
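
The convolution operation itself can be sketched in a few lines. The example below is a simplified, hypothetical illustration using nested lists, with no padding and a stride of 1. Like most deep learning libraries, it computes cross-correlation, meaning the kernel is not flipped. In a real CNN the kernel values are learned during training rather than set by hand.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# A tiny "image": dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written kernel that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
print(conv2d_valid(image, vertical_edge))  # [[0, 2, 0], [0, 2, 0]]
```

The output is non-zero only in the column where the brightness changes, which is how a convolutional filter picks out a local feature regardless of where it appears in the image.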

Radial Basis Function Networks

Radial Basis Function Networks (RBFNs) are typically used for function approximation and regression tasks. They rely on the concept of radial basis functions to model complex relationships between inputs and outputs.
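
As a rough sketch of how such a network computes its output, the example below assumes Gaussian basis functions, one common choice. The centers, widths, and output weights are hypothetical values; in practice they would be fitted to data.

```python
import math

def gaussian_rbf(x, center, width):
    """Gaussian radial basis function: depends only on the distance to the center."""
    dist_sq = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-dist_sq / (2.0 * width ** 2))

def rbf_network(x, centers, widths, out_weights, out_bias=0.0):
    """Hidden layer of RBF units followed by a linear output layer."""
    hidden = [gaussian_rbf(x, c, s) for c, s in zip(centers, widths)]
    return sum(w * h for w, h in zip(out_weights, hidden)) + out_bias

# Hypothetical parameters, chosen only for illustration.
centers = [[0.0, 0.0], [1.0, 1.0]]
widths = [0.5, 0.5]
out_weights = [1.0, -1.0]

print(rbf_network([0.0, 0.0], centers, widths, out_weights))  # close to +1
print(rbf_network([1.0, 1.0], centers, widths, out_weights))  # close to -1
```

Each hidden unit responds most strongly to inputs near its own center, so the output is dominated by whichever center the input is closest to.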

Self-Organizing Maps

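Self-Organizing Maps (SOMs) are unsupervised neural networks that map input data onto a low-dimensional grid of nodes, usually two-dimensional, so that similar inputs end up close together on the grid. Because they learn without labels, they group similar patterns rather than classify them. They are commonly used for tasks such as visualization, dimensionality reduction, and data clustering.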
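
A single SOM training step can be sketched as follows. This is a simplified illustration on a one-dimensional map with a Gaussian neighbourhood; the function names, learning rate, and radius are assumptions, and a full implementation would also shrink the learning rate and radius over time.

```python
import math

def best_matching_unit(nodes, sample):
    """Index of the node whose weight vector is closest to the sample."""
    def dist_sq(w):
        return sum((wi - si) ** 2 for wi, si in zip(w, sample))
    return min(range(len(nodes)), key=lambda i: dist_sq(nodes[i]))

def som_update(nodes, sample, learning_rate=0.5, radius=1.0):
    """Move the winning node and its grid neighbours toward the sample."""
    bmu = best_matching_unit(nodes, sample)
    updated = []
    for i, w in enumerate(nodes):
        # Influence is 1.0 at the winner and decays with grid distance |i - bmu|.
        influence = math.exp(-((i - bmu) ** 2) / (2.0 * radius ** 2))
        updated.append(
            [wi + learning_rate * influence * (si - wi) for wi, si in zip(w, sample)]
        )
    return updated, bmu

# Three nodes on a 1-D grid, each with a 2-D weight vector (hypothetical values).
nodes = [[0.0, 0.0], [0.5, 0.5], [1.0, 1.0]]
new_nodes, winner = som_update(nodes, [0.9, 1.0])
print(winner)     # 2
print(new_nodes)
```

The winner moves the most, its neighbour moves less, and the farthest node barely moves. Repeating this over many samples is what arranges similar inputs next to each other on the map.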

Generative Adversarial Networks

Generative Adversarial Networks (GANs) are composed of two neural networks: a generator network and a discriminator network. The generator network learns to generate synthetic data that resembles the real data, while the discriminator network learns to distinguish between the real and fake data. GANs are popular for tasks such as image generation and data synthesis.

Working Principle of a Neural Network

Data Input

In a neural network, data is provided as input to the network. This data can be in the form of numeric values, images, text, or any other suitable format depending on the task at hand.

Weighted Sum Calculation

Each neuron in the network receives input from multiple neurons, and each input is multiplied by a corresponding weight value. The weighted inputs are then summed together to calculate a weighted sum for each neuron.

Activation Function Application

The weighted sum calculated for each neuron is then passed through an activation function, which introduces nonlinearity into the network. The activation function determines the output of the neuron based on the weighted sum of its inputs.
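
The sketch below compares three widely used activation functions (sigmoid, tanh, and ReLU) on a few hypothetical weighted sums, then shows why the nonlinearity matters: without it, stacked layers collapse into a single linear step.

```python
import math

def sigmoid(z):
    """Maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Passes positive values through and clips negatives to 0."""
    return max(0.0, z)

# Hypothetical weighted sums, chosen only for illustration.
for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={math.tanh(z):.3f}  relu={relu(z):.1f}")

# Without an activation, two stacked linear neurons are still one linear neuron:
# w2 * (w1 * x) equals (w2 * w1) * x for every x.
w1, w2 = 3.0, -0.5
assert all(w2 * (w1 * x) == (w2 * w1) * x for x in (-1.0, 0.0, 2.0))
```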

Propagation

The output of each neuron in a layer serves as input to the neurons in the next layer. This process is repeated layer by layer until the output layer is reached. The flow of information from one layer to the next is known as forward propagation.
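
Forward propagation through a small network can be sketched as a loop over layers. The network below (two inputs, two hidden neurons with ReLU, one sigmoid output) and all of its weights are hypothetical; a trained network would have learned these values.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases, activation):
    """weights[j] holds the incoming weights of neuron j in this layer."""
    return [
        activation(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
        for neuron_w, b in zip(weights, biases)
    ]

def forward(x, layers):
    """Feed x through each (weights, biases, activation) layer in order."""
    activations = x
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations

layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -0.25], relu),  # hidden layer: 2 inputs -> 2 neurons
    ([[1.0, -2.0]], [0.0], sigmoid),                  # output layer: 2 inputs -> 1 neuron
]
output = forward([1.0, 0.5], layers)
print(output)
```

Each layer only needs the outputs of the layer before it, which is why the computation proceeds strictly from input to output.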

Training

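During the training phase, the weights and biases of the neural network are adjusted using backpropagation together with an optimization algorithm such as gradient descent. First, the error between the network’s output and the expected output is measured with a loss function. Backpropagation then propagates this error backward through the network to compute how much each weight and bias contributed to it, that is, the gradient of the loss with respect to each parameter. The optimizer uses these gradients to adjust the weights and biases in the direction that reduces the error. This iterative process continues until the network’s performance reaches a satisfactory level.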
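
The training loop can be illustrated on the smallest possible case: a single sigmoid neuron learning the logical AND function with batch gradient descent. This is a simplified sketch, not a full backpropagation implementation. With only one neuron, the backward pass is a single application of the chain rule, whereas a multilayer network repeats that step layer by layer. The cross-entropy loss, learning rate, and epoch count are assumptions made for this example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, weights, bias):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train_neuron(samples, epochs=2000, learning_rate=1.0):
    """Batch gradient descent on one sigmoid neuron with cross-entropy loss."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_inputs
        grad_b = 0.0
        for x, target in samples:
            # Forward pass: compute the neuron's output.
            y = predict(x, weights, bias)
            # Backward pass: for sigmoid + cross-entropy, dLoss/dz = y - target.
            delta = y - target
            for i, xi in enumerate(x):
                grad_w[i] += delta * xi
            grad_b += delta
        # Update: step against the gradient averaged over the batch.
        weights = [w - learning_rate * g / len(samples) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(samples)
    return weights, bias

and_gate = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train_neuron(and_gate)
predictions = [1 if predict(x, weights, bias) >= 0.5 else 0 for x, _ in and_gate]
print(predictions)  # [0, 0, 0, 1]
```

AND is linearly separable, so one neuron is enough. A problem such as XOR needs at least one hidden layer, and that is where propagating the error backward through several layers becomes necessary.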

Applications of Neural Networks

Image and Pattern Recognition

Neural networks have revolutionized image and pattern recognition tasks. They are widely used in applications such as facial recognition, object detection, and image classification. With their ability to learn and recognize complex patterns, neural networks have achieved remarkable accuracy levels in these tasks.

Natural Language Processing

Neural networks have shown great promise in natural language processing tasks, such as sentiment analysis, machine translation, and text generation. They can process and understand human language, enabling applications like chatbots, virtual assistants, and voice recognition systems.

Speech Recognition

Neural networks have led to significant advancements in speech recognition technology. They can convert spoken language into written text, making voice-based applications and devices more accessible and user-friendly. Speech recognition systems powered by neural networks can be found in virtual assistants, transcription services, and voice-controlled devices.

Predictive Analytics

Neural networks are widely used in predictive analytics, where historical data is analyzed to make predictions about future outcomes. They have been applied to problems such as sales forecasting, demand forecasting, and stock market prediction.

Data Mining

Neural networks play a crucial role in data mining, a process of discovering patterns and extracting useful information from large datasets. They can uncover hidden relationships and trends in data, helping organizations make efficient and informed decisions.

Robotics

Neural networks have made significant contributions to the field of robotics. They are used for tasks such as object recognition, motion planning, and control. Neural networks enable robots to perceive their environment, make decisions, and perform complex tasks more efficiently and autonomously.

Medicine

Neural networks have immense potential in the field of medicine. They are used for tasks such as disease diagnosis, drug discovery, and medical image analysis. By analyzing large amounts of medical data, neural networks can assist in early detection of diseases, improve treatment outcomes, and aid in medical research.

Finance

Neural networks have found applications in various aspects of finance, including stock market prediction, credit scoring, and fraud detection. They can analyze large volumes of financial data and identify patterns and anomalies that may not be apparent to human analysts. Neural networks help financial institutions make better financial decisions and mitigate risks.

Advantages of Neural Networks

Parallel Processing

Neural networks can perform computations in parallel, leveraging the power of multiple processors or graphics processing units (GPUs). This allows for faster processing of large amounts of data and enables real-time applications.

Nonlinear Mapping

Neural networks are capable of learning and modeling complex nonlinear relationships between inputs and outputs. Unlike linear models such as linear regression, they do not require the form of the relationship to be specified in advance, so they can capture intricate patterns and make accurate predictions on highly nonlinear data.

Adaptability

Neural networks have the ability to adapt and improve their performance based on experience. Through the process of training and adjusting the network’s weights, it can learn from examples and adjust its internal parameters to produce better results.

Fault Tolerance

Neural networks can be resilient to faults and partial failures. Because what a network has learned is distributed across many weights rather than stored in one place, its performance tends to degrade gradually, rather than fail outright, when a small number of neurons or connections are damaged or removed. This graceful degradation contributes to the robustness of neural networks in real-world scenarios.

Prediction Accuracy

Neural networks have demonstrated high prediction accuracy in various tasks, surpassing traditional statistical models in many cases. Their ability to learn complex patterns and process vast amounts of data allows them to make accurate predictions and improve decision-making.

Limitations of Neural Networks

Training Time

Training a neural network can be computationally intensive and time-consuming, especially for large and deep networks. The process of adjusting the weights and biases through backpropagation requires multiple iterations, and training time can increase significantly with the complexity of the network and the size of the dataset.

Overfitting

Neural networks are prone to overfitting, where the network becomes too specialized in the training data and fails to generalize well to unseen data. Overfitting can occur when the network is overly complex or when there is a lack of diverse training examples. Techniques such as regularization and early stopping are often used to mitigate overfitting.
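
Early stopping, one of the techniques mentioned above, can be sketched as a simple rule applied to the validation loss recorded after each training epoch. The function name, the `patience` parameter, and the loss values below are hypothetical and serve only to illustrate the idea.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch whose weights would be kept.

    Training stops once the validation loss has failed to improve
    for `patience` consecutive epochs.
    """
    best_epoch = 0
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # stop training; later epochs are never run
    return best_epoch

# Made-up validation losses: improving at first, then rising as overfitting sets in.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.58, 0.40]
print(early_stopping_epoch(val_losses))  # 3
```

The last value in the list is never reached, because training has already stopped by then. That is the trade-off of the rule: a small `patience` saves time and limits overfitting but can stop before a later improvement.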

Lack of Interpretability

Neural networks are often considered black boxes, as it can be challenging to interpret the reasoning behind the network’s decision-making. Understanding the internal workings of a neural network and explaining its decisions can be difficult, particularly with deep neural networks.

Need for Large Datasets

Neural networks typically require large amounts of labeled training data to learn effectively. Gathering and labeling large datasets can be time-consuming and expensive. In domains where labeled data is scarce, neural networks may face limitations in their ability to generalize and produce accurate results.

Current Developments and Future Trends

Deep Learning

Deep learning, a subfield of machine learning based on neural networks with many layers, has gained significant popularity in recent years. It involves training deep neural networks with multiple hidden layers, enabling them to learn hierarchical representations of data. Deep learning has achieved remarkable results in various domains such as computer vision, natural language processing, and speech recognition.

Explainable AI

As neural networks become more prevalent in critical applications, there is a growing demand for explainable AI. Researchers are working on developing techniques to make neural networks more transparent and interpretable, allowing users to understand the factors influencing the network’s decision-making.

Neuromorphic Computing

Neuromorphic computing aims to design computer systems that mimic the structure and function of the human brain. By emulating the neural architecture and information processing principles of the brain, neuromorphic computing has the potential to achieve low power consumption, high computational efficiency, and brain-like cognitive abilities.

Transfer Learning

Transfer learning is a technique that allows neural networks to leverage knowledge gained from one task or domain to improve performance on a different but related task. By transferring learned features and representations, neural networks can overcome limitations of small datasets and expedite the training process.

Conclusion

Neural networks have come a long way since their early developments and have emerged as a powerful tool in the field of artificial intelligence and machine learning. They have found applications in various domains such as image and pattern recognition, natural language processing, and predictive analytics. While they offer advantages such as parallel processing, adaptability, and fault tolerance, they also face limitations such as training time, overfitting, and lack of interpretability. However, ongoing developments in deep learning, explainable AI, neuromorphic computing, and transfer learning signify a promising future for neural networks. As researchers continue to push the boundaries of this technology, neural networks are expected to play an increasingly significant role in shaping the future of intelligent systems.